Previously, deploying applications was as simple as hosting them on a server or a single workstation. We relied on solutions such as Apache and IIS, mostly resolving issues or patching vulnerabilities via updates and hotfixes 🧪. However, things have changed dramatically since 2010—14 years ago—when organizations started to move from traditional VPS installations to cloud-based solutions. This change obviously enhanced security and operational efficiency, but it has also introduced additional challenges and vulnerabilities.

Today, the deployment process is more structured and sophisticated. We're no longer merely delivering applications; we need to understand their precise requirements, the versions in use, and all of their dependencies. This move has made our work more formal and hard, as administering new apps necessitates an extensive knowledge of their architecture and context.

Table of Contents

- Introduction

- Why Container Security Matter?

- What is Trivy?

- Setting Up Trivy in GitHub Actions

- Best Practices

- Code

- Conclusion

Why Container Security Matter?

Just like an NPM package is created every second, I could argue that CVEs emerge just as quickly—maybe not in a second, but certainly fast. These vulnerabilities emerge to help us identify risks and resolve them before they escalate into serious problems.

A CVE (Common Vulnerabilities and Exposures) is a list of publicly known security vulnerabilities that exist in software or hardware. Each CVE has a unique ID and specifies a specific vulnerability, allowing businesses to discover and address security vulnerabilities before they are exploited.

This table contains a variety of significant software that has had notable CVEs:

| Rank | Software | Number of CVEs | Notable Vulnerability Example | Description |

|---|---|---|---|---|

| 1 | Linux Kernel | 1000+ | CVE-2020-25712 (Privilege Escalation) | A core part of many operating systems, the Linux kernel often has numerous vulnerabilities related to hardware interfaces and memory management. |

| 2 | Apache HTTP Server | 400+ | CVE-2017-15715 (Remote Code Execution) | A widely used web server, Apache HTTP Server frequently faces vulnerabilities related to web protocols and server misconfigurations. |

| 3 | WordPress | 500+ | CVE-2018-6389 (Cross-Site Scripting) | Popular CMS with numerous plugins and themes, leading to many security flaws that can affect websites and user data. |

| 4 | OpenSSL | 200+ | CVE-2014-0160 (Heartbleed) | Provides cryptographic functions to many systems; vulnerabilities often involve weak encryption or improper handling of secure communications. |

| 5 | Microsoft Windows | 1000+ | CVE-2020-0601 (Cryptographic Issue) | The world’s most widely used OS has long been a target for a broad range of exploits across services and applications. |

| 6 | Java (JDK) | 1000+ | CVE-2016-0603 (Remote Code Execution) | The Java Development Kit has many vulnerabilities, especially in its older versions, affecting enterprise software globally. |

| 7 | Docker | 100+ | CVE-2019-5736 (Privilege Escalation) | As the leading containerization tool, Docker has numerous vulnerabilities in container security and Docker daemon configurations. |

| 8 | Node.js | 150+ | CVE-2018-12115 (Denial of Service) | Node.js, as a runtime for JavaScript, often has CVEs related to dependency management and security flaws in asynchronous code execution. |

| 9 | Adobe Flash | 800+ | CVE-2015-0313 (Remote Code Execution) | Although no longer supported, Flash once had numerous security issues, often involving memory corruption and exploits via web browsers. |

| 10 | Python | 200+ | CVE-2018-1000800 (Arbitrary Code Execution) | Python’s broad usage across web apps and server environments has made it a common target for vulnerabilities, especially in third-party libraries. |

As you can see, the various components of the programs you develop or use as services frequently have a large number of known vulnerabilities. This makes it essential to frequently check for updates and ensure that your services and applications are patched as soon as possible. Failure to do so could result in major problems, particularly when essential applications are in production and adding value to your business. Without regular maintenance, you risk an unexpected failure 💥 that could disrupt operations at any moment.

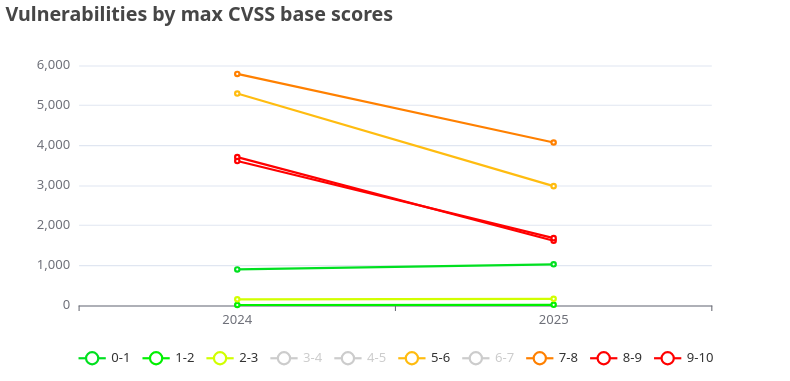

Fortunately, CVE fixes have significantly improved from 2024 to 2025. It’s clear that both organizations and individual developers are increasingly focused on resolving vulnerabilities, aiming to reduce risk and ensure smoother operations. This progress allows for more ☕ coffee time without worrying about critical security gaps. We can only hope that this positive trend continues as we move forward.

What is Trivy?

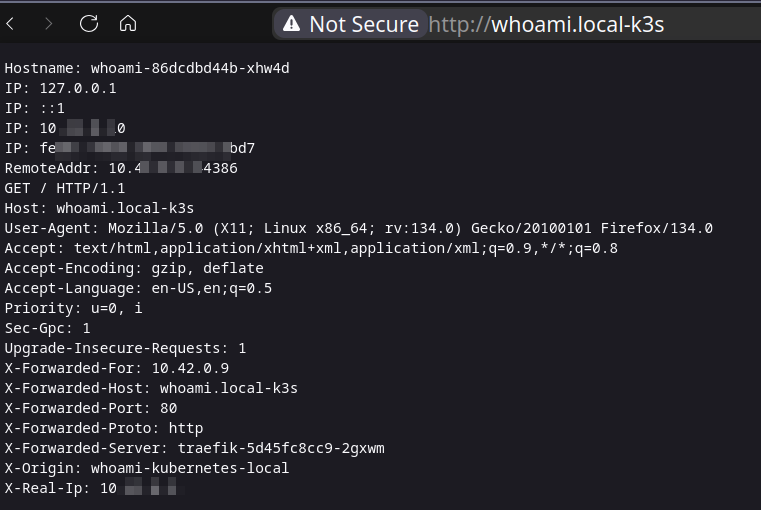

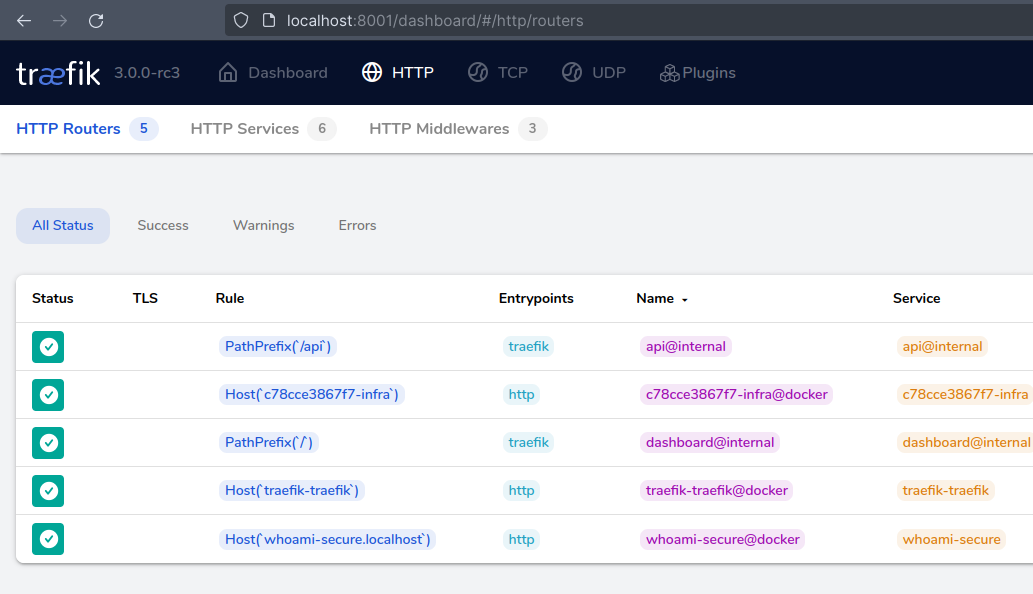

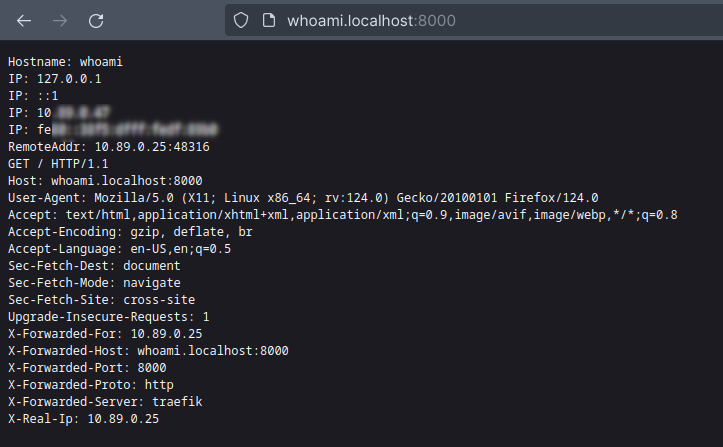

In terms of security, Trivy offers an effective solution for checking your container images on a regular basis for vulnerabilities. However, as self-hosted applications gain popularity, there is a growing trend of exposing ports for communication via HTTP, UDP, TCP, gRPC, and other protocols, providing interaction with both internal and external systems. This change adds new complexity to securing not only container images but also communication links between services. So exploits became common, turning this into an acknowledged vulnerability.

Trivy also monitors your IaC for missing setups, which might lead to vulnerabilities. For example, a Kubernetes deployment with unchecked container capabilities can be vulnerable to exploits.

Trivy, developed by Aqua Security, is a popular open-source vulnerability scanner designed for modern DevSecOps workflows. Its standout qualities — speed, simplicity, and comprehensive coverage — make it a preferred choice among developers and security professionals alike.

⚡ Fast scanning.

Trivy is designed for performance. It runs rapid scans by caching results locally and utilizing little system resources. This makes it appropriate not only for CI/CD pipelines, but also for local development environments where developers want rapid feedback.

- Low latency: Initial scans download vulnerability databases; subsequent scans are nearly instant.

- Optimized for CI/CD: Integrates easily with GitHub Actions, GitLab CI, Jenkins, and other tools.

🧩 Simple to use.

One of Trivy's most significant advantages is its ease of use, from installation to execution.

A single binary requires no complex setup. Simply install and start.

Straightforward CLI: A developer-friendly interface that does not require security knowledge.

Quick onboarding: Teams can begin scanning in minutes.

trivy image nginx:latest

# or with docker

docker run --rm -v /var/run/docker.sock:/var/run/docker.sock aquasec/trivy image nginx:latest

🔐 Comprehensive Scanning Capabilities

Trivy isn't only for container images. It supports a wide range of targets, making it an adaptable tool for safeguarding infrastructure and applications from beginning to finish.

- Container Images (Docker, Podman)

- Operating System Packages (Debian, Alpine, etc.)

- Infrastructure as Code (IaC) (Terraform, Kubernetes manifests, Helm)

- Source Code Repositories

- SBOM (Software Bill of Materials) support

- Git repositories (local or remote)

With Trivy, you can detect:

- CVEs (Common Vulnerabilities and Exposures)

- Misconfigurations

- Secrets (API keys, tokens)

- License compliance issues

🔄 Shift Left Friendly

Trivy enables teams to take a "shift-left" security approach, detecting risks early in the development process before they reach production. This results in fewer surprises and less security debt.

📦 Example Use Cases

- Scan a Docker image for known vulnerabilities

- Audit a Kubernetes YAML file for insecure defaults

- Validate Terraform infrastructure for misconfigurations

- Check for exposed secrets in your Git repo

In short, Trivy offers a uniform toolkit for scanning across the software lifecycle, delivering useful information at a minimal overhead. Whether you're developing microservices or managing cloud-native infrastructure, Trivy keeps your stack secure without slowing you down.

Setting Up Trivy in GitHub Actions

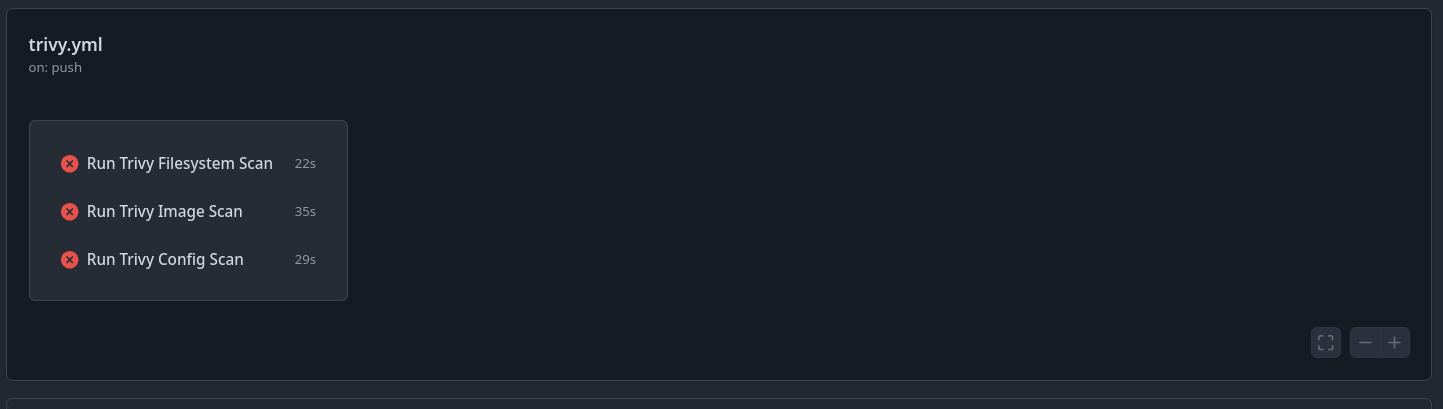

Let's create a CI pipeline that uses GitHub Actions to run Trivy on each contribution. This allows us to detect and avoid important security issues before they reach production.

In a fast-paced development environment, it's tempting to become excited about releasing new features — but it's also crucial to remember that insecure code can harm the business, users, and your team. So, take responsibility for your code's security: avoid older or unmaintained packages, don't introduce untrusted dependencies, and always think before installing.

Security is not simple, but it is your responsibility, and I believe in you. Let's create safer software one commit at a time.

Here's a GitHub Actions workflow to execute Trivy on any pushed commit:

name: Trivy Scan

on:

push:

branches: [ "main" ]

pull_request:

branches: [ "main" ]

permissions:

contents: read

jobs:

fs-scan:

runs-on: ubuntu-latest

name: Run Trivy Filesystem Scan

steps:

- name: Checkout code

uses: actions/checkout@v3

- name: Run Trivy vulnerability scanner in fs mode

uses: aquasecurity/trivy-action@master

with:

scan-type: 'fs'

scan-ref: '.'

trivy-config: trivy/trivy.yaml

severity: 'HIGH,CRITICAL'

exit-code: 2

image-scan:

runs-on: ubuntu-latest

name: Run Trivy Image Scan

steps:

- name: Checkout code

uses: actions/checkout@v3

- name: Build Docker image

run: docker build -f Containerfile -t local-nginx .

- name: Run Trivy vulnerability scanner in image mode

uses: aquasecurity/trivy-action@master

with:

scan-type: 'image'

image-ref: 'local-nginx'

trivy-config: trivy/trivy.yaml

severity: 'HIGH,CRITICAL'

exit-code: 2

config-scan:

runs-on: ubuntu-latest

name: Run Trivy Config Scan

steps:

- name: Checkout code

uses: actions/checkout@v3

- name: Run Trivy vulnerability scanner in config mode

uses: aquasecurity/trivy-action@master

with:

scan-type: 'config'

scan-ref: '.'

trivy-config: trivy/trivy.yaml

severity: 'HIGH,CRITICAL'

exit-code: 2As you can see, I utilized three scanning methods: fs to scan the local file system directory and image to scan a custom container image that was created and made available locally and config to scan IaC.

The Trivy file settings (trivy.yaml):

severity:

- HIGH

- CRITICAL

ignore-unfixed: true

ignore:

vulnerabilities:

- CVE-2021-12345

- CVE-2022-67890

output:

format: table

scan:

config:

enabled: true

secrets:

enabled: true

iac:

enabled: true

policies:

- terraform

- kubernetes

timeout: 5m

- severity: Defines the severity levels that your scan will treat as vulnerabilities.

- ignore: This section allows you to specify which CVEs to bypass during the scan, but use with caution as it’s at your own risk.

- scan: Here, you can choose the type of scans to run, such as scanning Docker images, file systems, or Infrastructure as Code (IaC).

🧪 Output (trimmed)

Here, we have the outputs from Trivy for three different types of scans: container image, file system, and Infrastructure as Code (IaC).

I created files with vulnerabilities to demonstrate Trivy in action. These include insecure IaC, secrets exposed in a file, and a vulnerable container image.

Report Summary

┌─────────────────────────────┬────────┬─────────────────┬─────────┐

│ Target │ Type │ Vulnerabilities │ Secrets │

├─────────────────────────────┼────────┼─────────────────┼─────────┤

│ nginx:latest (debian 12.10) │ debian │ 154 │ - │

└─────────────────────────────┴────────┴─────────────────┴─────────┘

Legend:

- '-': Not scanned

- '0': Clean (no security findings detected)

nginx:latest (debian 12.10)

Total: 154 (UNKNOWN: 2, LOW: 99, MEDIUM: 39, HIGH: 12, CRITICAL: 2)

┌────────────────────┬─────────────────────┬──────────┬──────────────┬─────────────────────────┬───────────────┬──────────────────────────────────────────────────────────────┐

│ Library │ Vulnerability │ Severity │ Status │ Installed Version │ Fixed Version │ Title │

├────────────────────┼─────────────────────┼──────────┼──────────────┼─────────────────────────┼───────────────┼──────────────────────────────────────────────────────────────┤

│ apt │ CVE-2011-3374 │ LOW │ affected │ 2.6.1 │ │ It was found that apt-key in apt, all versions, do not │

│ │ │ │ │ │ │ correctly... │

│ │ │ │ │ │ │ https://avd.aquasec.com/nvd/cve-2011-3374 │

AVD-AWS-0132 (HIGH): Bucket does not encrypt data with a customer managed key.

═══════════════════════════════════════════════════════════════════

Encryption using AWS keys provides protection for your S3 buckets. To increase control of the encryption and manage factors like rotation use customer managed keys.

See https://avd.aquasec.com/misconfig/avd-aws-0132

───────────────────────────────────────────────────────────────────

trivy/example.tf:24-31

───────────────────────────────────────────────────────────────────

24 ┌ resource "aws_s3_bucket" "insecure_bucket" {

25 │ bucket = "insecure-bucket-example"

26 │ acl = "public-read"

27 │

28 │ versioning {

29 │ enabled = false

30 │ }

31 └ }

AVD-KSV-0014 (HIGH): Container 'vulnerable-container' of Deployment 'vulnerable-deployment' should set 'securityContext.readOnlyRootFilesystem' to true

═══════════════════════════════════════════════════════════════════

An immutable root file system prevents applications from writing to their local disk. This can limit intrusions, as attackers will not be able to tamper with the file system or write foreign executables to disk.

See https://avd.aquasec.com/misconfig/ksv014

───────────────────────────────────────────────────────────────────

trivy/deployment.yml:18-34

───────────────────────────────────────────────────────────────────

18 ┌ - name: vulnerable-container

19 │ image: nginx:1.16

20 │ ports:

21 │ - containerPort: 80

22 │ env:

23 │ - name: SECRET_KEY

24 │ value: "hardcoded-secret"

25 │ securityContext:

26 └ privileged: true

HIGH: AsymmetricPrivateKey (private-key)

════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════════

Asymmetric Private Key

────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

trivy/secrets.txt:10

────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

8 "project_id": "fake-project-id",

9 "private_key_id": "fakeprivatekeyid12345",

10 [ "private_key": "-----BEGIN PRIVATE KEY-----******************-----END PRIVATE KEY-----\n",

11 "client_email": "[email protected]",

────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

Trust me 🤝, you can check the results directly in the GitHub CI:

Best Practices

Frequent scans

Running vulnerability scans on each commit is secure, but it may be costly 💸🤑💰, particularly in businesses with hundreds of developers and several projects. Your CI pipeline may struggle under the pressure, affecting performance and developer productivity.

Instead, consider examining your CI phases and selecting the appropriate time to execute security tests. As your procedures evolve, you can use common, "pre-scanned" base images across projects to reduce scanning time while retaining a high level of security.

Prioritize high and critical vulnerabilities

Make sure your workflow fails when critical or high-severity vulnerabilities are discovered. Detecting vulnerabilities early is critical, but not every vulnerability should halt development. According to your risk tolerance and business environment, low or medium-level vulnerabilities may be acceptable, but don't wait until they reach high levels to pay attention 🧐.

It is critical to determine which severity levels are undesirable in production and which are manageable. This decision should be part of an ongoing, open discussion between 👨💻development and 👮security teams to find the correct balance between safety and productivity.

Regular updates

Keeping your base images and dependencies up to date is one of the best strategies to reduce vulnerabilities. Scanning helps you detect worries early on, but it's also critical to understand why those vulnerabilities exist in your stack.

For example, if your application depends on too many libraries, you run the danger of importing deprecated or unmaintained packages. In ecosystems like NPM, a single dependent might pull in dozens of transitive dependencies, sometimes for functionality you rarely use. In such circumstances, consider creating lightweight alternatives or including only what you require.

Be cautious of using trendy frameworks or libraries simply because they're popular. If they stop receiving updates or support from the community, you could be left with a security risk 🧠.

Don't make decisions based solely on emotions ❤️; remember the wisdom of this Bible verse:

Jeremiah 17:9 (NIV):

"The heart is deceitful above all things and beyond cure. Who can understand it?"

While solutions like Trivy promote a culture of regular updates, it is your obligation to ensure that your team avoids unmaintained or obsolete dependencies - prevention begins with smart choices.

Outputs on CI

Depending on your CI volume and team size, reviewing scan results immediately within the CI pipeline might be confusing and hard to read, making it 🥴 challenging for developers to identify and resolve vulnerabilities effectively.

To optimize this process, Trivy reports can be integrated with other tools, such as SonarQube, to improve the user experience, consolidate security issues, and provide more explicit solutions. This integration helps you organize vulnerability data, streamline issue tracking, and enable developers to focus on fixing significant problems rather than becoming lost in a sea of scan results.

Code

💡 Feel free to clone this repository, which contains related files:

Conclusion

That’s all for today, folks! I hope you enjoyed this quick dive into Trivy.

My goal was to raise awareness about security and share practical tools and best practices that you can apply in your daily development workflow.

Stay safe, write secure code, never stop learning and keep your kernel 🧠 up to date!

References

]]>☕ Pegue seu café e bora mergulhar no artigo!

Porque entender o que acontece com

Este artigo é pra você que quer entender a fundo aquela aplicação que misteriosamente começa a apresentar problemas... geralmente no fim da tarde de uma sexta-feira. Acertei? 😅

☕ Pegue seu café e bora mergulhar no artigo!

Porque entender o que acontece com sua aplicação antes do caos da sexta à tarde é sempre uma boa ideia. 😃

Guia de bordo

- Os 3 Pilares da Observabilidade

- O que é OpenTelemetry?

- Cloud Native Computing Foundation (CNCF)

- Benefícios do OpenTelemetry

- Entregando observabilidade

- Chega de Teoria, Vamos Codar!

- Configurando o OpenTelemetry

- Instrumentação

- Como testar?

- Conclusão

🧱 Os 3 Pilares da Observabilidade

A observabilidade moderna se apoia em três pilares principais:

- 📊 Métricas: mostram o estado da aplicação ao longo do tempo (como uso de CPU, requisições por segundo, latência etc.).

- 🧻 Logs: registram eventos e mensagens que ajudam a entender o que aconteceu em determinado momento.

- 🔍 Traces: acompanham o caminho de uma requisição de ponta a ponta, revelando gargalos e dependências entre serviços.

Juntos, esses três pilares ajudam a responder uma das perguntas mais importantes no dia a dia de quem opera sistemas: “Por que meu sistema está se comportando desse jeito?”

E é exatamente aí que o OpenTelemetry entra em cena — fornecendo as ferramentas necessárias para coletar, padronizar e correlacionar métricas, logs e traces, tudo em um único ecossistema.

O que é OpenTelemetry?

É uma iniciativa de código aberto que tem como objetivo estabelecer um padrão unificado para a coleta, o processamento e a exportação de dados de telemetria. Trata-se de uma solução completa para monitorar o desempenho, o comportamento e a saúde de aplicações e sistemas, oferecendo visibilidade total sobre o que está acontecendo em seu ambiente.

Cloud Native Computing Foundation (CNCF)

Se você já se perdeu no meio de logs, métricas e traces tentando entender o que tá pegando na sua aplicação, respira fundo — você não está sozinho. A boa notícia é que a galera da comunidade open source também sentia essa dor… e decidiu fazer algo a respeito.

Foi daí que nasceu o OpenTelemetry, uma ferramenta que veio ao mundo como resultado da união de dois projetos que já estavam mandando bem: OpenTracing e OpenCensus. Em vez de continuar com iniciativas separadas, a comunidade juntou forças 🔥 pra criar um padrão único e poderoso de observabilidade.

Essa fusão virou realidade e, em outubro de 2022, rolou o lançamento da primeira versão estável do OpenTelemetry. Desde então, o projeto só cresceu e virou queridinho de quem trabalha com sistemas distribuídos, microsserviços e Kubernetes.

E sabe o que é mais legal? O projeto é mantido pela Cloud Native Computing Foundation (CNCF) — a mesma galera por trás do Kubernetes, Prometheus, Envoy e outros pesos pesados do mundo cloud native.

Ou seja, se você quer observabilidade de verdade no seu sistema, sem gambiarra e com suporte da comunidade, o OpenTelemetry é o caminho.

Benefícios do OpenTelemetry

🔍 Visibilidade Aprimorada

Com o OpenTelemetry, você ganha olhos de águia sobre seus sistemas. É possível monitorar desempenho, comportamento e saúde em tempo real, o que significa que nada passa despercebido — de latências inesperadas a gargalos ocultos.

🛠️ Diagnóstico e Resolução de Problemas Sem Dor de Cabeça

Ao oferecer uma telemetria completa, padronizada e consistente, o OpenTelemetry facilita (e muito!) a identificação da raiz dos problemas. Isso se traduz em respostas mais rápidas, menos tempo de inatividade e menos estresse na madrugada.

📊 Decisões Guiadas por Dados Reais

Nada de achismos. Com dados confiáveis em mãos, fica mais fácil tomar decisões estratégicas: otimizar o desempenho da aplicação, identificar padrões de uso e até direcionar recursos com mais inteligência.

🔄 Interoperabilidade e Flexibilidade de Verdade

OpenTelemetry não te prende a nenhuma ferramenta específica. Ele é agnóstico de fornecedor, permitindo integração com diversos backends populares como Prometheus, Jaeger, Zipkin, Datadog, entre outros. Isso dá liberdade pra montar o stack de observabilidade que fizer mais sentido pro seu time

Principais recursos do OpenTelemetry

O OpenTelemetry oferece bibliotecas (SDKs) prontas para você instrumentar suas aplicações de forma simples e eficiente. Com elas, é possível coletar dados de telemetria como métricas, logs e traces, tudo de maneira padronizada.

⚒ Instrumentação

Esses SDKs são compatíveis com as principais linguagens de programação do mercado, incluindo C++, C#, Elixir, Go, Java, Lua, Ruby, Rust, Python e JavaScript, permitindo que você adote observabilidade de ponta a ponta, independentemente da stack que estiver usando.

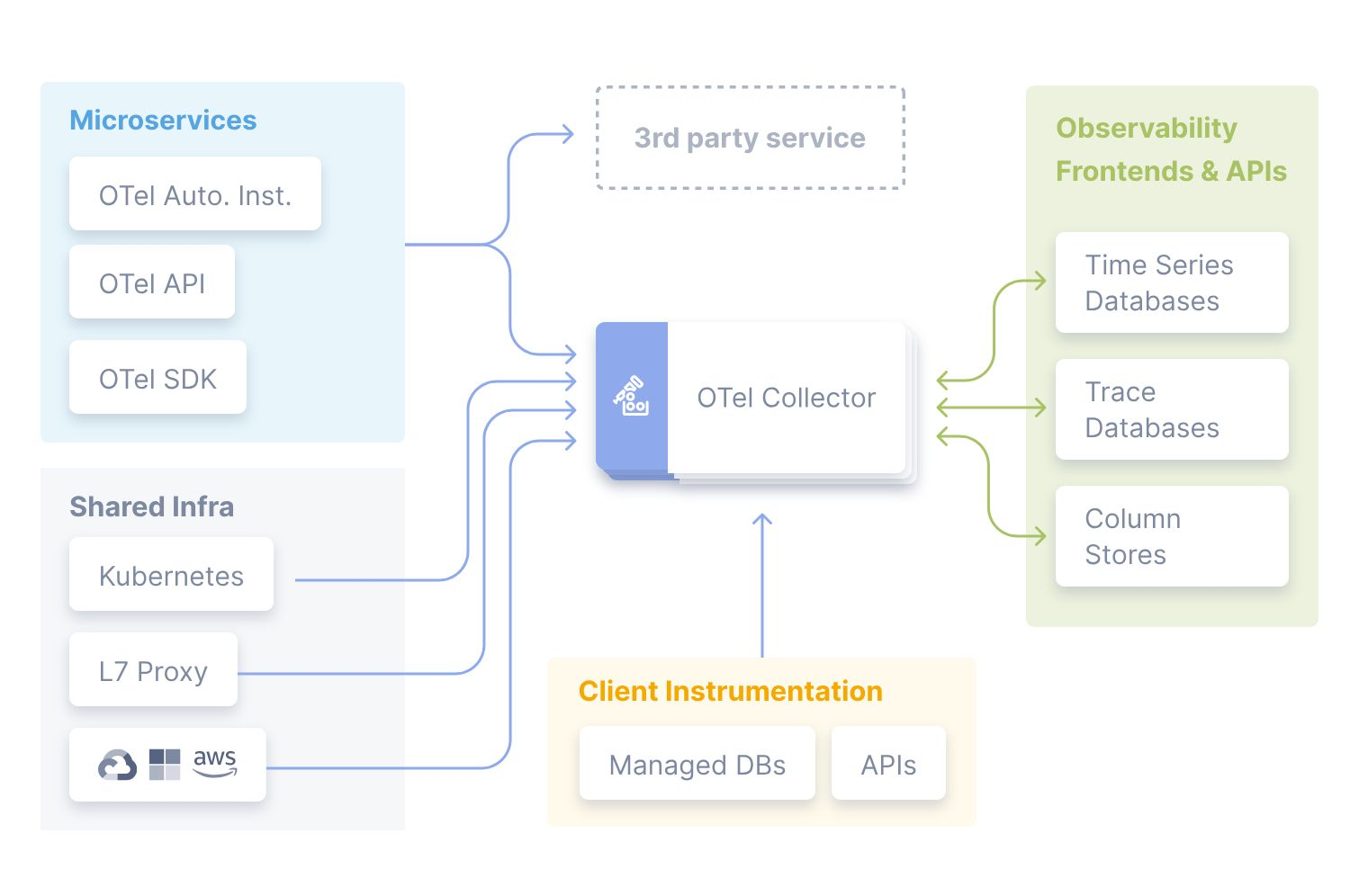

🗑 Coleta e Processamento Inteligente

O OpenTelemetry conta com um coletor centralizado (o famoso OpenTelemetry Collector) que funciona como um hub de dados de telemetria. Ele recebe informações vindas de diversas fontes — como aplicações, agentes e sidecars — e faz o trabalho pesado: agrega, filtra, transforma e roteia esses dados antes de enviá-los para a ferramenta de análise ou observabilidade da sua escolha.

Com isso, você ganha mais controle, eficiência e flexibilidade, além de aliviar o trabalho das suas aplicações, que não precisam se preocupar com a exportação direta dos dados.

🚚 Exportação para Onde Você Quiser

Com o OpenTelemetry, seus dados não ficam presos em um lugar só. Ele suporta diversos backends de telemetria, permitindo que você exporte métricas, logs e traces para as plataformas de observabilidade e análise que já usa — como Prometheus, Jaeger, Zipkin, Datadog, New Relic, entre outros.

Essa flexibilidade garante que você possa escolher a melhor ferramenta para cada necessidade, sem abrir mão da padronização e consistência na coleta dos dados.

🧰 Componentes Essenciais do OpenTelemetry

🎯 Instrumentação

As bibliotecas de instrumentação são integradas diretamente às suas aplicações, permitindo a coleta automática (ou manual) de métricas, logs e traces. É com elas que você começa a dar visibilidade ao que acontece "debaixo do capô" dos seus serviços.

🗃️ Coletores (Collectors)

O OpenTelemetry Collector atua como uma central de inteligência: recebe dados de telemetria de múltiplas fontes, processa, transforma e filtra as informações antes de enviá-las aos destinos configurados. Ele alivia o trabalho das aplicações e garante mais controle sobre o fluxo dos dados.

📦 Exportadores (Exporters)

Os exportadores são responsáveis por enviar os dados processados para os backends de observabilidade. O OpenTelemetry já traz suporte a várias ferramentas populares, como Prometheus, Jaeger, Zipkin, Datadog, New Relic, entre outras.

📊 Backends de Telemetria

São as plataformas onde os dados ganham vida: eles armazenam, processam e visualizam as métricas, logs e traces coletados. É aqui que você consegue entender o desempenho, o comportamento e a saúde da sua aplicação com dashboards, alertas e análises avançadas.

🛡️ E os dados sensíveis? Relaxa, dá pra proteger!

Quando estamos lidando com dados sensíveis, como informações de cartão de crédito, CPF/CNPJ, e-mails ou qualquer outro dado pessoal, é essencial garantir que nada disso vaze nos seus traces.

Com o OpenTelemetry, é possível remover ou mascarar esses dados antes mesmo que eles sejam exportados, usando um componente chamado OTel Processor. Esse processador permite filtrar, anonimizar ou transformar campos específicos no pipeline de telemetria, garantindo conformidade com LGPD, GDPR e outras regulamentações de privacidade.

Ou seja, você continua com visibilidade total do sistema, mas sem comprometer a segurança e a privacidade dos seus usuários.

O que o OpenTelemetry coleta?

O OpenTelemetry (OTel) funciona como um verdadeiro detetive digital: ele coleta e exporta tudo o que você precisa saber sobre sua aplicação — sem complicar.

Como mencionamos anteriormente, ele se apoia nos três pilares da observabilidade:

📊 Métricas, 🧻 Logs e 🔍 Traces.

Suporte as linguagens

| Linguagem | Suporte OpenTelemetry | Estágio |

|---|---|---|

| Java | ✅ Sim | Estável |

| JavaScript (Node.js/Browser) | ✅ Sim | Estável |

| Python | ✅ Sim | Estável |

| Go | ✅ Sim | Estável |

| .NET (C#) | ✅ Sim | Estável |

| Ruby | ✅ Sim | Estável |

| C++ | ✅ Sim | Em evolução |

| PHP | ⚠️ Parcial | Em desenvolvimento |

| Rust | ⚠️ Parcial | Comunidade ativa |

| Swift | ⚠️ Parcial | Projeto comunitário |

| Erlang/Elixir | ⚠️ Parcial | Projeto comunitário |

🔎 Observação: As linguagens com suporte “parcial” ou “em desenvolvimento” ainda estão evoluindo ou são mantidas pela comunidade.

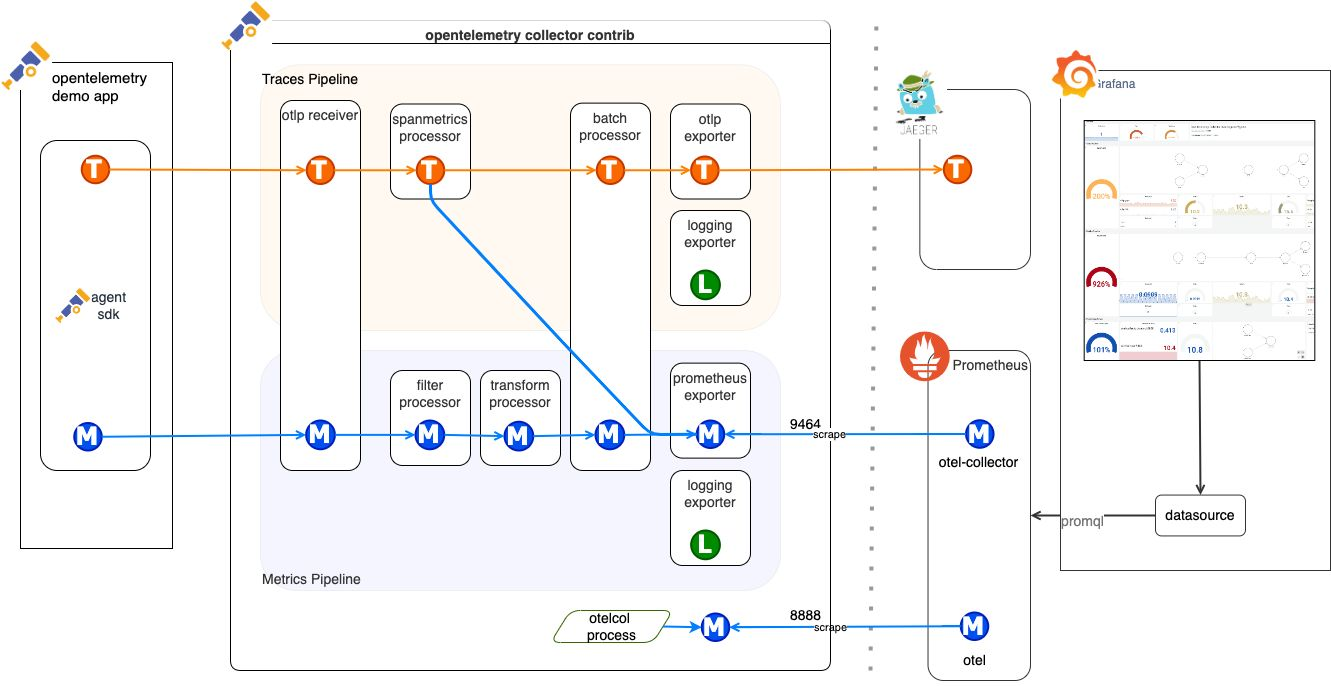

Entregando observabilidade

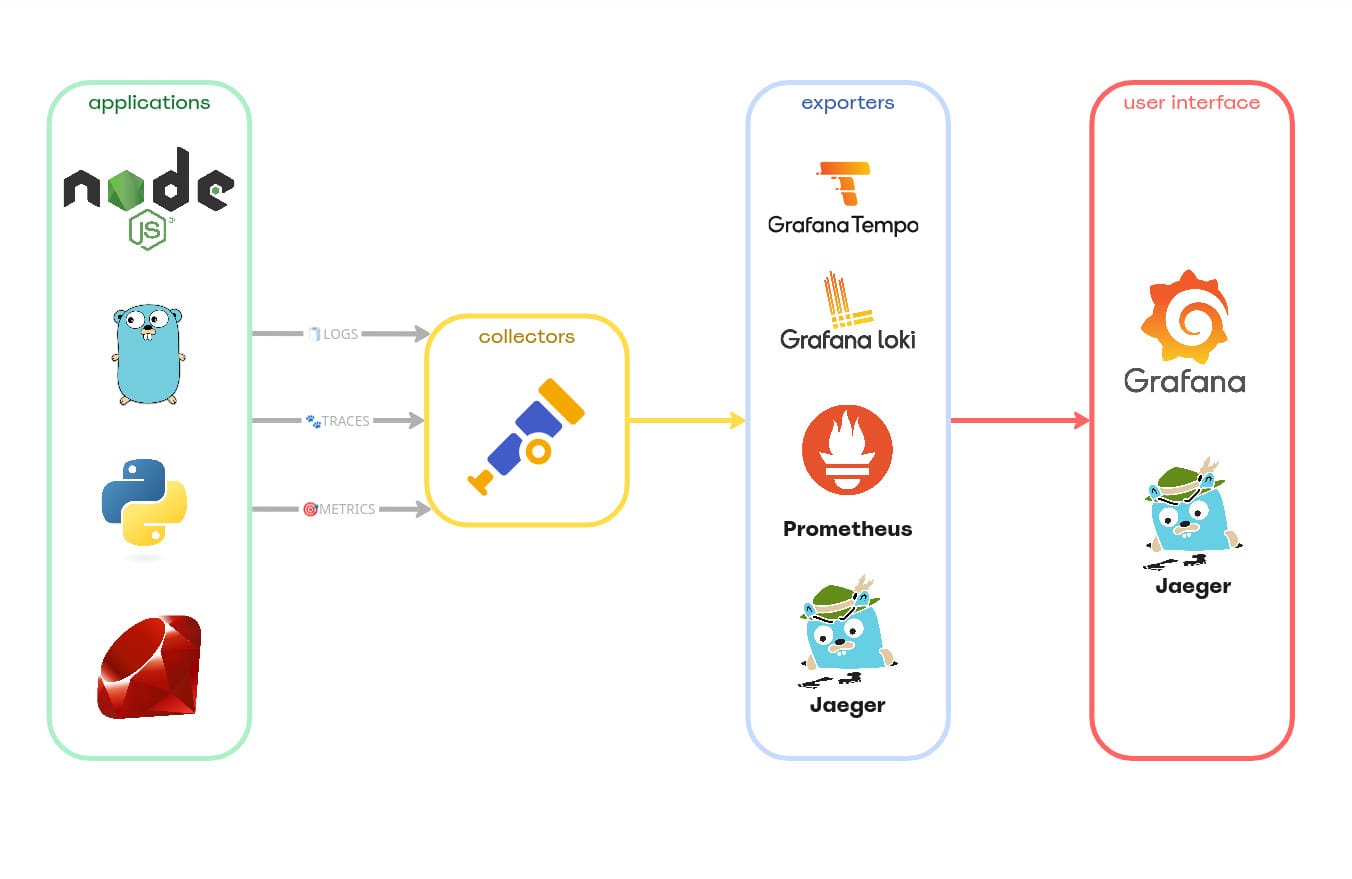

No diagrama acima, visualizamos as principais camadas de uma arquitetura de observabilidade. Iniciando pela camada das aplicações, que são as responsáveis por gerar os insumos fundamentais: logs, traces e métricas. Em seguida, entra o OpenTelemetry Collector, que atua como intermediário — ele recebe, processa e transforma esses dados, encaminhando-os para um ou mais exporters, que se encarregam de armazená-los ou redirecioná-los para ferramentas especializadas.

Por fim, temos a camada de apresentação, composta por interfaces de visualização (UIs), onde é possível construir dashboards, configurar alertas e executar queries sobre os dados coletados. Neste exemplo, usamos apenas duas ferramentas, mas a arquitetura é flexível e compatível com diversas soluções comerciais amplamente adotadas no mercado, como Datadog, New Relic, Kibana, entre outras.

Se o objetivo for algo mais simples, especialmente em ambientes de desenvolvimento, também é possível enviar os dados diretamente para o Jaeger, que oferece uma visualização prática e eficiente dos três pilares da observabilidade: métricas, logs e traces.

Chega de Teoria, Vamos Codar!

Há algum tempo, iniciei algumas POCs com o objetivo de descomplicar a instrumentação com OpenTelemetry em aplicações escritas nas diversas linguagens que compunham a stack com a qual eu estava trabalhando.

Durante esse processo, acabei mudando de empresa — mas continuei esse estudo, evoluindo a ideia e consolidando o aprendizado em uma apresentação resumida, que agora serve de base para este artigo.

Como mencionei antes, estamos lidando com várias linguagens, então a proposta aqui é mostrar rapidamente trechos de implementação, só pra dar o gostinho. E claro: o repositório com todos os exemplos está disponível — você pode clonar, testar e entender na prática como a instrumentação com OpenTelemetry funciona em cada caso:

Configurando o OpenTelemetry

A configuração do colector (OTel)

- otel-collector-config.yaml

# OpenTelemetry Collector config that receives OTLP

receivers:

otlp:

protocols:

grpc:

endpoint: "0.0.0.0:4317"

http:

endpoint: "0.0.0.0:4318"

processors:

batch:

send_batch_size: 1024

timeout: 5s

attributes/scrape:

actions:

- key: environment

value: production

action: insert

- key: process.command_line

action: delete

- pattern: credit_card

action: delete

- pattern: password

action: delete

- pattern: email

action: hash

- pattern: vatnumber

action: hash

- pattern: document

action: hash

- pattern: x_secret_key

action: hash

exporters:

debug:

verbosity: detailed

otlp/jaeger:

endpoint: jaeger:4317

tls:

insecure: true

loki:

endpoint: http://loki:3100/loki/api/v1/push

prometheus:

endpoint: 0.0.0.0:18888

otlp/tempo:

endpoint: tempo:4317

tls:

insecure: true

extensions:

zpages: {}

service:

extensions: [zpages]

pipelines:

traces:

receivers: [otlp]

processors: [attributes/scrape, batch]

exporters: [otlp/jaeger, otlp/tempo]

metrics:

receivers: [otlp]

processors: [attributes/scrape, batch]

exporters: [prometheus]

logs:

receivers: [otlp]

processors: [attributes/scrape, batch]

exporters: [loki]

Nesta configuração, o OpenTelemetry Collector não se limita apenas à coleta de dados — também configuramos etapas de processamento para demonstrar todo o potencial da ferramenta. O objetivo é mostrar como o coletor pode transformar dados em tempo real, seja inserindo novos campos, removendo informações desnecessárias ou mascarando dados sensíveis para evitar exposições indesejadas.

Abaixo, explicamos os principais blocos da configuração:

- receivers: definem os endpoints de entrada por onde o coletor recebe os dados. É aqui que especificamos quais protocolos ou fontes o OpenTelemetry Collector deve escutar.

- processors : responsáveis por transformar os dados recebidos. Podemos, por exemplo, remover informações sensíveis, adicionar marcações personalizadas (como tags de ambiente ou origem), ou filtrar dados irrelevantes, garantindo que apenas informações úteis sejam enviadas para os destinos finais.

- exporters: definem os destinos para onde os dados de observabilidade serão enviados. Entre as opções mais comuns estão o Jaeger, DataDog, Prometheus, entre outros sistemas de monitoramento e rastreamento.

- service: é aqui que configuramos as pipelines de execução para cada um dos pilares da observabilidade — logs, traces e métricas. Cada pipeline determina quais são os seus respectivos receivers, processors e exporters, orquestrando o fluxo completo dos dados dentro do coletor.

Os containers

- docker-compose.yml

name: otel

services:

otel:

profiles: [all, otel]

networks:

- otel

ports:

- 14317:4317

- 14318:4318

- 18888:18888

image: otel/opentelemetry-collector-contrib:0.123.0

command: ["--config=/conf/otel-collector-config.yaml"]

privileged: true

volumes:

- "./otel/otel-collector-config.yaml:/conf/otel-collector-config.yaml"

depends_on:

- loki

jaeger:

profiles: [all, otel]

networks:

- otel

image: jaegertracing/all-in-one:1.68.0

ports:

- 16687:16686

depends_on:

- otel

prometheus:

profiles: [all, otel]

networks:

- otel

image: prom/prometheus:v3.2.1

ports:

- "9090:9090"

volumes:

- prometheus-data:/prometheus

- ./prometheus/prometheus.yml:/etc/prometheus/prometheus.yml

grafana:

profiles: [all, grafana]

networks:

- otel

image: grafana/grafana:11.6.0

ports:

- "3000:3000"

environment:

- GF_SECURITY_ADMIN_PASSWORD=admin

volumes:

- grafana-data:/var/lib/grafana

depends_on:

- loki

- tempo

loki:

profiles: [all, grafana]

networks:

- otel

image: grafana/loki:3

command: [ "-config.file=/etc/loki/local-config.yaml" ]

ports:

- "3100:3100"

volumes:

- ./grafana/loki-config.yaml:/etc/loki/local-config.yaml

- loki-rules-data:/etc/loki/rules/fake

promtail:

profiles: [all, grafana]

networks:

- otel

image: grafana/promtail:3

volumes:

- ./grafana/promtail-config.yaml:/etc/promtail/config.yml

- /var/log:/var/log

- /var/lib/docker/containers:/var/lib/docker/containers

depends_on:

- loki

- prometheus

tempo:

profiles: [all, grafana]

networks:

- otel

image: grafana/tempo:latest

command: [ "-config.file=/etc/tempo/tempo.yaml" ]

ports:

- "3200:3200"

volumes:

- ./grafana/tempo-config.yaml:/etc/tempo/tempo.yaml

- tempo-data:/var/tempo

redis:

profiles: [all, db]

networks:

- otel

image: bitnami/redis:7.2

environment:

- ALLOW_EMPTY_PASSWORD=yes

ports:

- 6379:6379

python:

profiles: [all, apps]

networks:

- otel

build:

dockerfile: ./app/python/Containerfile

environment:

- PORT=8000

- OTEL_PYTHON_LOGGING_AUTO_INSTRUMENTATION_ENABLED=true

- OTEL_EXPORTER_OTLP_ENDPOINT=http://otel:4317

- OTEL_EXPORTER_OTLP_TRACES_ENDPOINT=http://otel:4317/v1/traces

- OTEL_EXPORTER_OTLP_METRICS_ENDPOINT=http://otel:4317/v1/metrics

- OTEL_RESOURCE_ATTRIBUTES="service.name=python-otlp,team=dev,cluster-name=local,env=dev"

- OTEL_SERVICE_NAME=python-otlp

ports:

- 8000:8000

depends_on:

- otel

go:

profiles: [all, apps]

networks:

- otel

build:

dockerfile: ./app/go/Containerfile

environment:

- OTEL_EXPORTER_OTLP_ENDPOINT=http://otel:4317

- OTEL_RESOURCE_ATTRIBUTES="service.name=go-otlp,team=dev,cluster-name=local,env=dev"

- OTEL_SERVICE_NAME=go-otlp

- REDIS_URL=redis:6379

ports:

- 8001:8001

depends_on:

- otel

ruby:

profiles: [all, apps]

networks:

- otel

build:

dockerfile: ./app/ruby/Containerfile

environment:

- PORT=8002

- OTEL_EXPORTER_OTLP_TRACES_ENDPOINT=http://otel:4318/v1/traces

- OTEL_EXPORTER_OTLP_METRICS_ENDPOINT=http://otel:4318/v1/metrics

- OTEL_RESOURCE_ATTRIBUTES="service.name=ruby-otlp,team=dev,cluster-name=local,env=dev"

- OTEL_SERVICE_NAME=ruby-otlp

- OTEL_TRACES_EXPORTER=otlp

ports:

- 8002:8002

depends_on:

- otel

node:

profiles: [all, apps]

networks:

- otel

build:

dockerfile: ./app/node/Containerfile

environment:

- PORT=8003

- OTEL_EXPORTER_OTLP_ENDPOINT=http://otel:4318

- OTEL_EXPORTER_OTLP_TRACES_ENDPOINT=http://otel:4318/v1/traces

- OTEL_EXPORTER_OTLP_METRICS_ENDPOINT=http://otel:4318/v1/metrics

- OTEL_RESOURCE_ATTRIBUTES="service.name=node-otlp,team=dev,cluster-name=local,env=dev"

- OTEL_SERVICE_NAME=node-otlp

- OTEL_TRACES_EXPORTER=otlp

ports:

- 8003:8003

depends_on:

- otel

volumes:

tempo-data:

loki-rules-data:

grafana-data:

prometheus-data:

networks:

otel:

name: "otel"

- Otel: Abreviação de OpenTelemetry Collector, é o componente responsável por receber, processar e exportar dados de observabilidade como logs, métricas e traces.

- Jaeger: Ferramenta de distributed tracing que permite rastrear o caminho completo de uma requisição em sistemas distribuídos, sendo muito útil em arquiteturas de microserviços para identificar gargalos e monitorar a performance entre serviços.

- Prometheus: Solução de monitoramento que coleta e armazena métricas em tempo real. Possui suporte a consultas avançadas e permite a criação de alertas e dashboards personalizados.

- Grafana: Plataforma web de código aberto para visualização e análise de dados. Suporta múltiplas fontes, como Prometheus, Loki e Tempo, e é amplamente utilizada para criação de dashboards interativos.

- Loki: Sistema de gerenciamento de logs desenvolvido pela Grafana Labs. Ao contrário de outras soluções, como o Elasticsearch, o Loki é otimizado para trabalhar em conjunto com Prometheus e Grafana, utilizando os mesmos rótulos (labels) e focando em logs estruturados e de fácil correlação com métricas e traces.

- Promtail: Agente responsável por coletar logs de arquivos locais e enviá-los ao Loki, o sistema de gerenciamento de logs da Grafana.

- Tempo: Plataforma open-source mantida pela Grafana Labs, voltada para o gerenciamento de distributed tracing. Permite a correlação de traces com métricas e logs em um único ambiente visual.

- Redis: Sistema de cache em memória amplamente utilizado por aplicações. Pensando em testes do contexto de observabilidade, pode gerar spans de traces relacionados a operações de leitura e escrita no cache.

- Python, Ruby, Go e Node: Exemplos de containers de aplicação que atuam como fontes de dados para o coletor OpenTelemetry, fornecendo logs, métricas e traces que alimentam todo o pipeline de observabilidade.

Os arquivos de configuração do Loki, Tempo e Promtail estão disponíveis no repositório e devem ser baixados antes da execução. Eles garantem que cada serviço funcione corretamente dentro do pipeline de observabilidade.

Instrumentação

No repositório, você encontrará diversos exemplos de instrumentação. Neste artigo, vamos focar brevemente na implementação em Go. Por ser uma linguagem compilada, o Go exige uma abordagem mais explícita e detalhada na instrumentação. Já em linguagens interpretadas como Node.js, Ruby e Python, esse processo tende a ser mais simples, graças aos recursos de metaprogramação que facilitam a inserção automática de telemetria no código.

Go

package main

import (

"context"

"encoding/json"

"fmt"

"log"

"os"

"net/http"

"github.com/brianvoe/gofakeit/v7"

"github.com/prometheus/client_golang/prometheus"

"github.com/prometheus/client_golang/prometheus/promauto"

"github.com/prometheus/client_golang/prometheus/promhttp"

"github.com/redis/go-redis/extra/redisotel/v9"

"github.com/redis/go-redis/v9"

"go.opentelemetry.io/contrib/instrumentation/net/http/otelhttp"

"go.opentelemetry.io/otel"

"go.opentelemetry.io/otel/attribute"

"go.opentelemetry.io/otel/exporters/otlp/otlptrace"

"go.opentelemetry.io/otel/exporters/otlp/otlptrace/otlptracegrpc"

"go.opentelemetry.io/otel/sdk/resource"

sdktrace "go.opentelemetry.io/otel/sdk/trace"

"go.opentelemetry.io/otel/trace"

)

// Email counter metric

var emailCounter = promauto.NewCounter(prometheus.CounterOpts{

Name: "email_counter",

Help: "The total number of email sent",

})

// Shutdown handler is responsible for finishing trace.

type ShutdownHandler func(context.Context) error

// Regular email data

type Email struct {

From string `json:"from"`

To string `json:"to"`

Subject string `json:"subject"`

Body string `json:"body"`

}

// Regular customer

type Customer struct {

Id string // the customer unique id

Name string // the customer name

Document string // the document number

Email string // the customer email

}

// This function is responsible for setting up the program before it runs

func init() {

gofakeit.Seed(0)

}

// Build redis client connection

func setupRedis() *redis.Client {

rdb := redis.NewClient(&redis.Options{

Addr: os.Getenv("REDIS_URL"),

Password: "",

DB: 0,

})

if err := redisotel.InstrumentTracing(rdb); err != nil {

panic(err)

}

if err := redisotel.InstrumentMetrics(rdb); err != nil {

panic(err)

}

return rdb

}

// Initializes the open telemetry tracer.

func setupTracer(ctx context.Context) (ShutdownHandler, error) {

exporter, err := otlptracegrpc.New(ctx)

if err != nil {

return nil, err

}

tp := buildTracer(ctx, exporter)

otel.SetTracerProvider(tp)

return tp.Shutdown, nil

}

// Build an open telemetry tracer

func buildTracer(ctx context.Context, exporter *otlptrace.Exporter) *sdktrace.TracerProvider {

res, err := resource.New(ctx,

resource.WithAttributes(

attribute.String("service.name", os.Getenv("OTEL_SERVICE_NAME")),

),

)

if err != nil {

panic(err)

}

return sdktrace.NewTracerProvider(

sdktrace.WithBatcher(exporter),

sdktrace.WithResource(res),

)

}

// Responsible for finalizing trace context.

func doShutdown(ctx context.Context, shutdown ShutdownHandler) {

func() {

if err := shutdown(ctx); err != nil {

log.Fatalf("failed to shut down tracer: %v", err)

}

}()

}

// Add span attributes values

func setupSpanValues(span trace.Span) {

span.SetAttributes(

attribute.String("customer.id", gofakeit.UUID()),

attribute.String("customer.email", gofakeit.Email()),

attribute.String("customer.password", gofakeit.Password(true, true, true, true, true, 10)),

attribute.String("customer.vatnumber", gofakeit.SSN()),

attribute.String("customer.credit_card", gofakeit.CreditCard().Number),

attribute.String("db.user", gofakeit.Username()),

attribute.String("db.password", gofakeit.Password(true, true, true, true, true, 10)),

attribute.String("account.email", gofakeit.Email()),

)

}

// Returns an internal server error

func writeHttpError(span trace.Span, w http.ResponseWriter, errorMessage string) {

span.AddEvent("error",

trace.WithAttributes(

attribute.String("value", errorMessage),

),

)

span.End()

w.WriteHeader(http.StatusInternalServerError)

w.Write([]byte(errorMessage))

}

// Route to generate stats for every request.

func sendEmailRoute(rdb *redis.Client) func(w http.ResponseWriter, r *http.Request) {

return func(w http.ResponseWriter, r *http.Request) {

tracer := otel.Tracer("go-tracer")

_, span := tracer.Start(r.Context(), "send-email")

message, err := gofakeit.EmailText(&gofakeit.EmailOptions{})

if err != nil {

writeHttpError(span, w, fmt.Sprintf("failed to fetch random message: %v", err))

return

}

customer := Customer{

Id: gofakeit.UUID(),

Name: gofakeit.Name(),

Email: gofakeit.Email(),

Document: gofakeit.SSN(),

}

email := Email{

From: fmt.Sprintf("no-reply@%v", gofakeit.DomainName()),

To: customer.Email,

Subject: gofakeit.BookTitle(),

Body: message,

}

span.SetAttributes(

attribute.String("customer.id", customer.Id),

attribute.String("customer.email", customer.Email),

attribute.String("customer.document", customer.Document),

)

setupSpanValues(span)

jsonEmail, err := json.Marshal(email)

if err != nil {

writeHttpError(span, w, fmt.Sprintf("failed to parse email message: %v", err))

return

}

err = rdb.SPublish(r.Context(), "email", jsonEmail).Err()

if err != nil {

writeHttpError(span, w, fmt.Sprintf("failed to queue email message: %v", err))

return

}

span.AddEvent("email",

trace.WithAttributes(

attribute.String("subject", email.Subject),

attribute.String("content", email.Body),

),

)

emailCounter.Inc()

response := fmt.Sprintf("📨 The email was queued successfully: %v", email.Subject)

span.AddEvent("log-message", trace.WithAttributes(attribute.String("message", message)))

log.Println(message)

span.End()

w.WriteHeader(http.StatusCreated)

w.Write([]byte(response))

}

}

func main() {

rdb := setupRedis()

ctx := context.Background()

shutdown, err := setupTracer(ctx)

if err != nil {

log.Fatalf("failed to initialize open telemetry tracer: %v", err)

}

defer doShutdown(ctx, shutdown)

otelHandler := otelhttp.NewHandler(http.HandlerFunc(sendEmailRoute(rdb)), "SendEmail")

http.Handle("/metrics", promhttp.Handler())

http.Handle("/send-email", otelHandler)

http.ListenAndServe(":8001", nil)

}

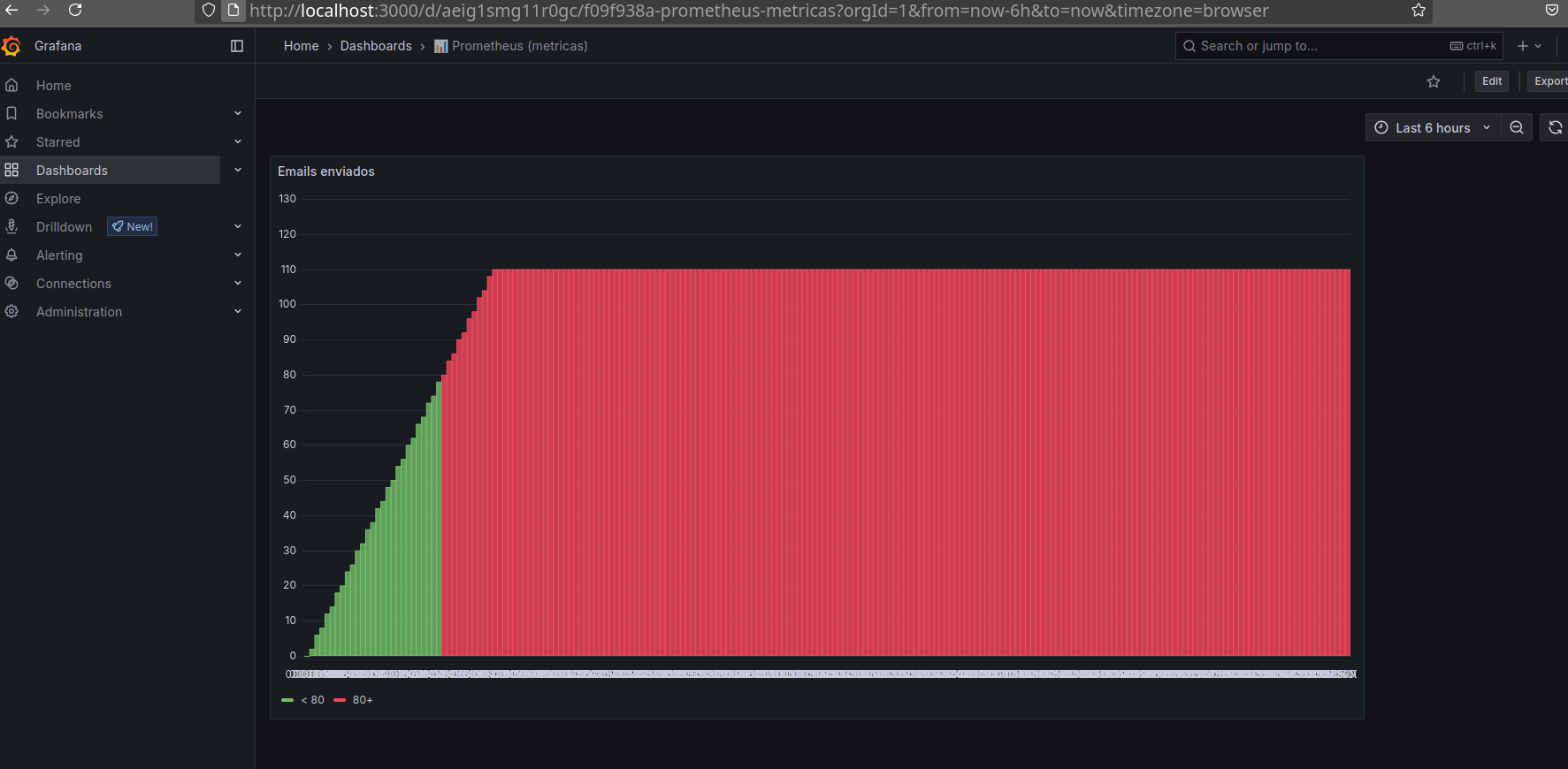

Abaixo temos a implementação de uma métrica do tipo contador, responsável por registrar o total de e-mails enviados. Esse tipo de dado pode ser utilizado para gerar gráficos, indicar o funcionamento da aplicação e até mesmo disparar alertas em situações anômalas.

No caso específico da aplicação escrita em Go, optei por utilizar o Prometheus para expor as métricas em vez do próprio OpenTelemetry. Isso porque, durante os testes, apenas a aplicação em Go apresentou dificuldades ao despachar métricas diretamente via OTel.

Felizmente, o Prometheus é totalmente compatível com o OpenTelemetry Collector, o que nos permite integrá-lo de forma transparente ao pipeline de observabilidade.

var emailCounter = promauto.NewCounter(prometheus.CounterOpts{

Name: "email_counter",

Help: "The total number of email sent",

})

http.Handle("/metrics", promhttp.Handler())

http.ListenAndServe(":8001", nil)Aqui estamos enviando por meio do tracer um span (send-email), que representa uma ação ou operação dentro do sistema. Esse rastro pode ser visualizado nas ferramentas de observabilidade para ajudar a entender o comportamento da aplicação em tempo real.

tracer := otel.Tracer("go-tracer")

_, span := tracer.Start(r.Context(), "send-email")A integração de logs no Go funciona principalmente através de Events adicionados aos spans. No entanto, a integração direta com loggers ainda precisa ser melhor explorada.

Como o ⚠️ SDK do OpenTelemetry para Go ainda está em desenvolvimento ativo, algumas dessas funcionalidades não estão totalmente integradas ou exigem soluções manuais.

span.AddEvent("email",

trace.WithAttributes(

attribute.String("subject", email.Subject),

attribute.String("content", email.Body),

),

)

span.AddEvent("error",

trace.WithAttributes(

attribute.String("value", errorMessage),

),

)

span.End()Outras instrumentações, como mencionamos anteriormente, são mais simples de configurar. Você pode conferir os detalhes e exemplos completos diretamente no repositório no GitHub.

Como testar?

Preparei um script em Bash (scripts/do-requests.sh) que será responsável por executar múltiplas requisições nas aplicações, com o objetivo de gerar logs, métricas e traces que alimentarão nosso pipeline de observabilidade.

Antes de executar o script, é importante garantir que todos os containers estejam em funcionamento. Para isso, utilize o seguinte comando:

docker compose --profile all up -dEsse comando irá iniciar todos os serviços definidos no docker-compose.yml em segundo plano. Assim que os containers estiverem ativos, podemos prosseguir com a execução do script de carga para simular o comportamento das aplicações.

Com todos os containers em execução, estamos prontos para gerar os insumos de observabilidade — ou seja, os logs, métricas e traces que serão processados pelo nosso coletor.

Execute o comando abaixo para iniciar o script de carga:

bash scripts/do-requests.sh

Após a execução, já teremos dados disponíveis para visualização direta no Grafana e no Jaeger, que foram definidos como nossos exporters.

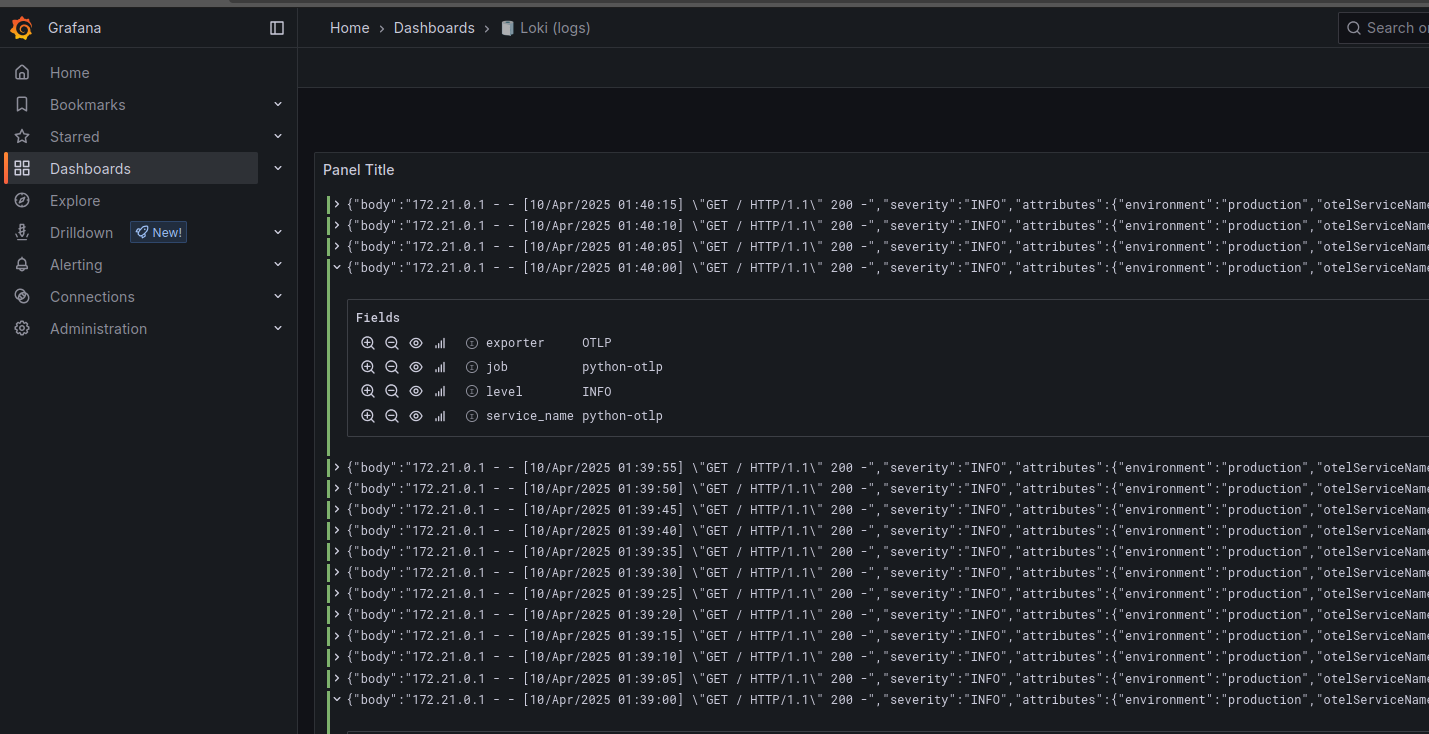

Grafana

O Grafana tem como foco oferecer uma experiência completa em observabilidade. Além de visualizar logs, traces e métricas, ele permite criar dashboards personalizadas e configurar alertas inteligentes, proporcionando uma visão centralizada e em tempo real do comportamento dos sistemas.

No detalhe 🔍

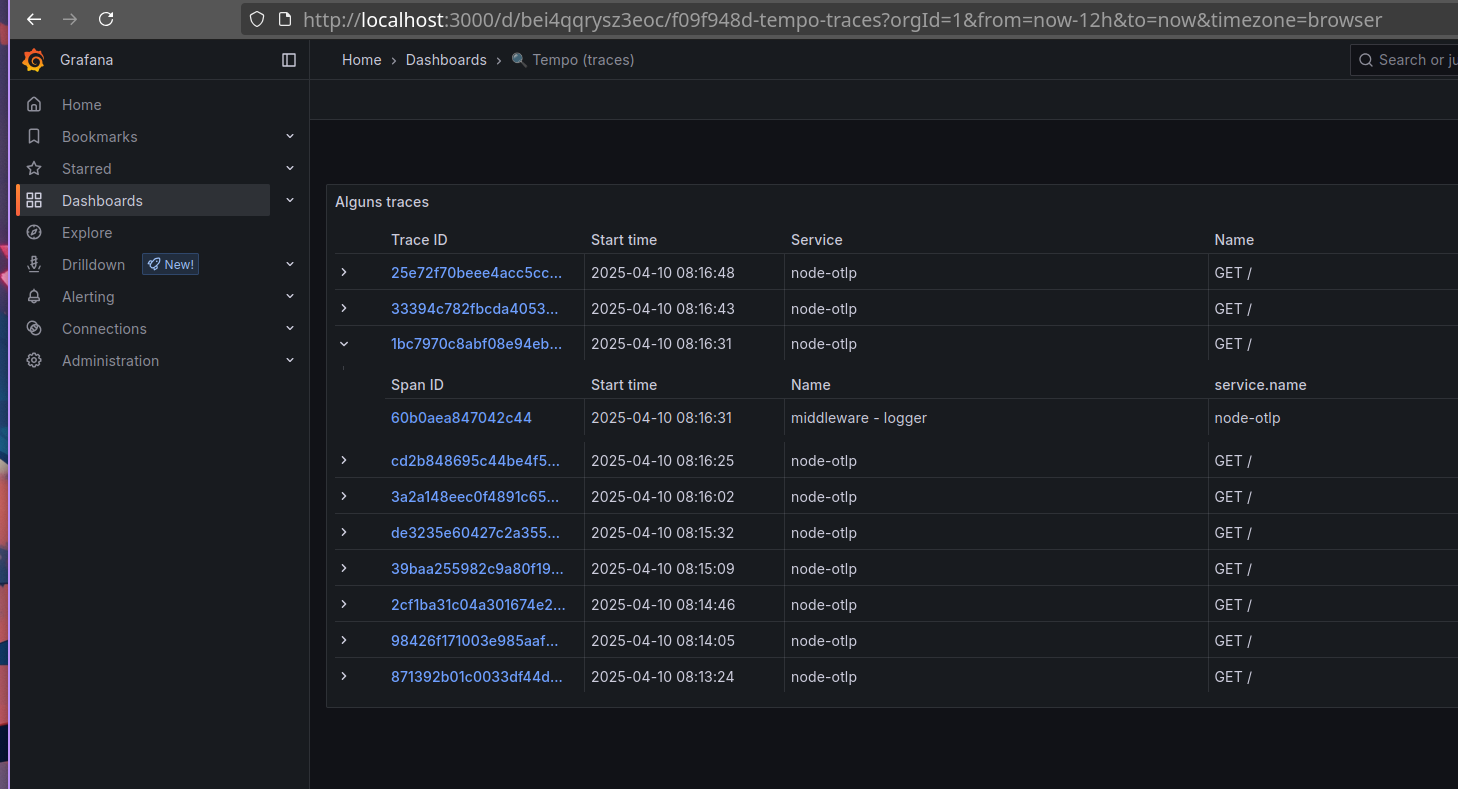

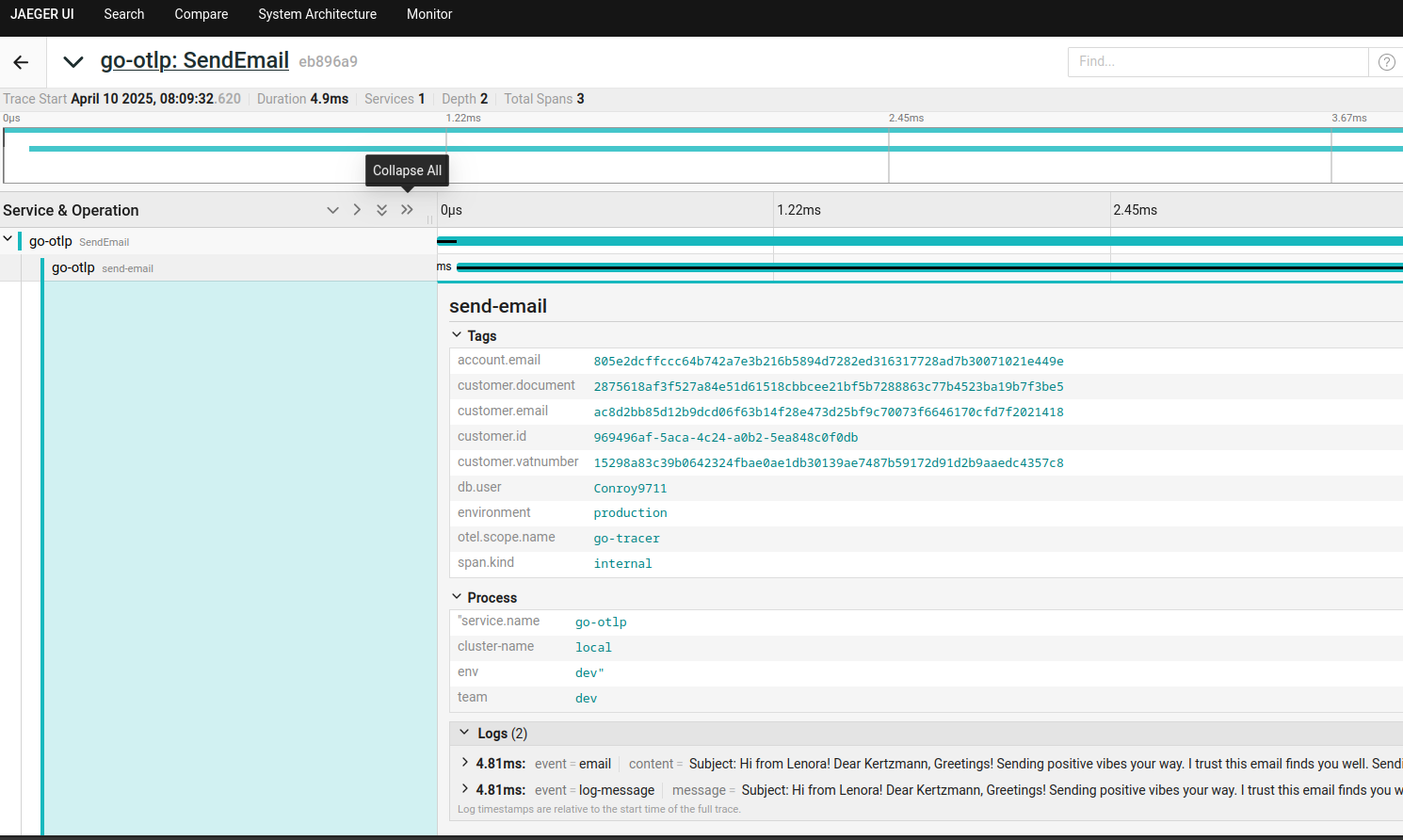

Jaeger

Com o Jaeger, é possível observar traces, métricas e logs de forma minimalista e objetiva. Ele é extremamente útil para identificar gargalos e entender o fluxo de requisições dentro da aplicação, especialmente em ambientes com microsserviços. Além disso, o Jaeger permite importar traces em formato JSON, facilitando análises manuais ou integrações com outras ferramentas.

No detalhe 🔍

Conclusão

Neste artigo, criamos um exemplo prático de integração de aplicações em diferentes linguagens com o OpenTelemetry. Exploramos as principais configurações do repositório e vimos como os dados de observabilidade são exibidos no Jaeger e no Grafana.

Espero que essa introdução ao OpenTelemetry ajude a aumentar a visibilidade dos seus projetos e torne sua vida de desenvolvedor mais tranquila — e com menos emoções indesejadas.

E por hoje é isso!

Que Deus 🕊️ te abençoe, e não se esqueça: mantenha seu kernel 🧠 sempre atualizado!

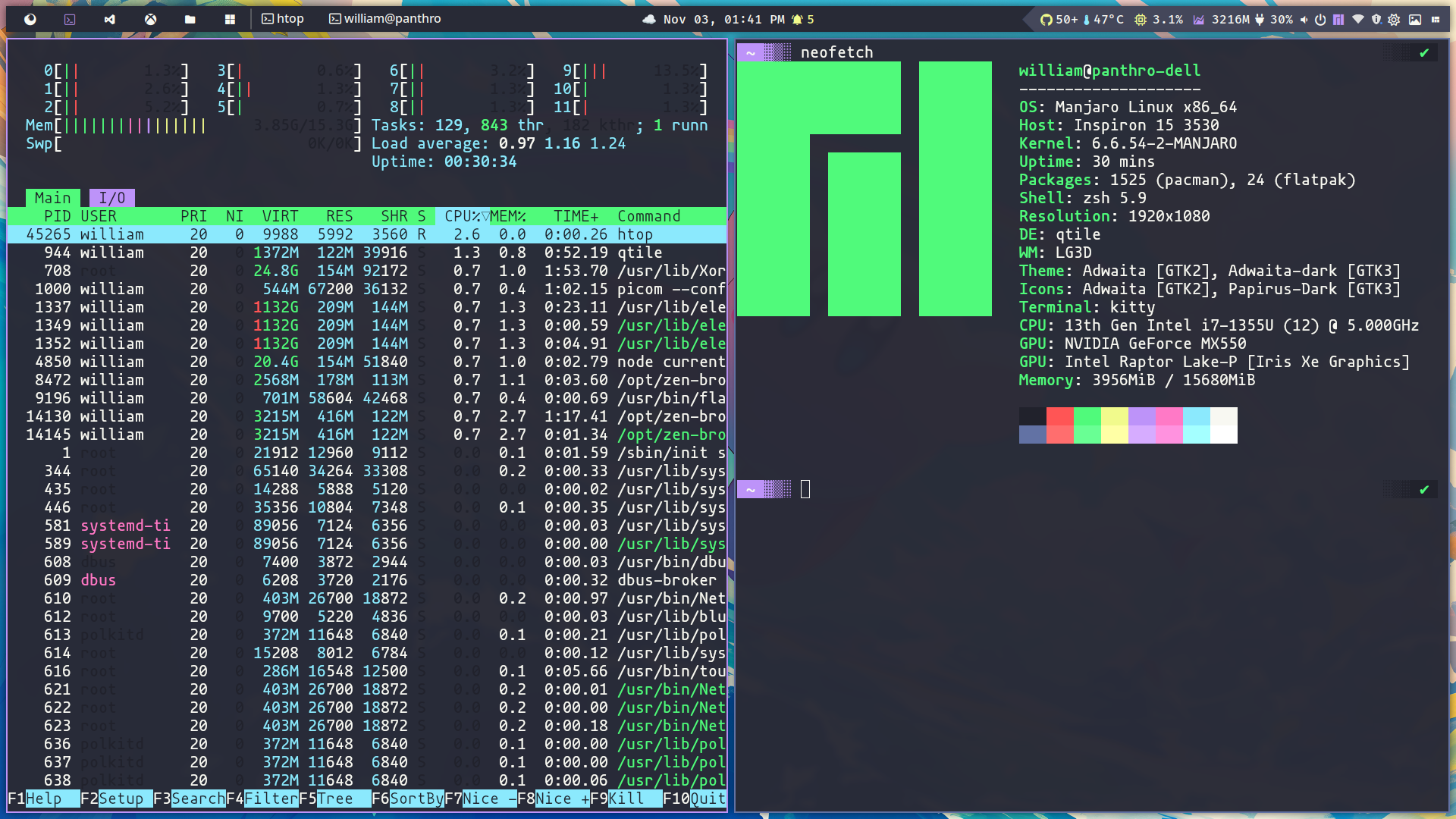

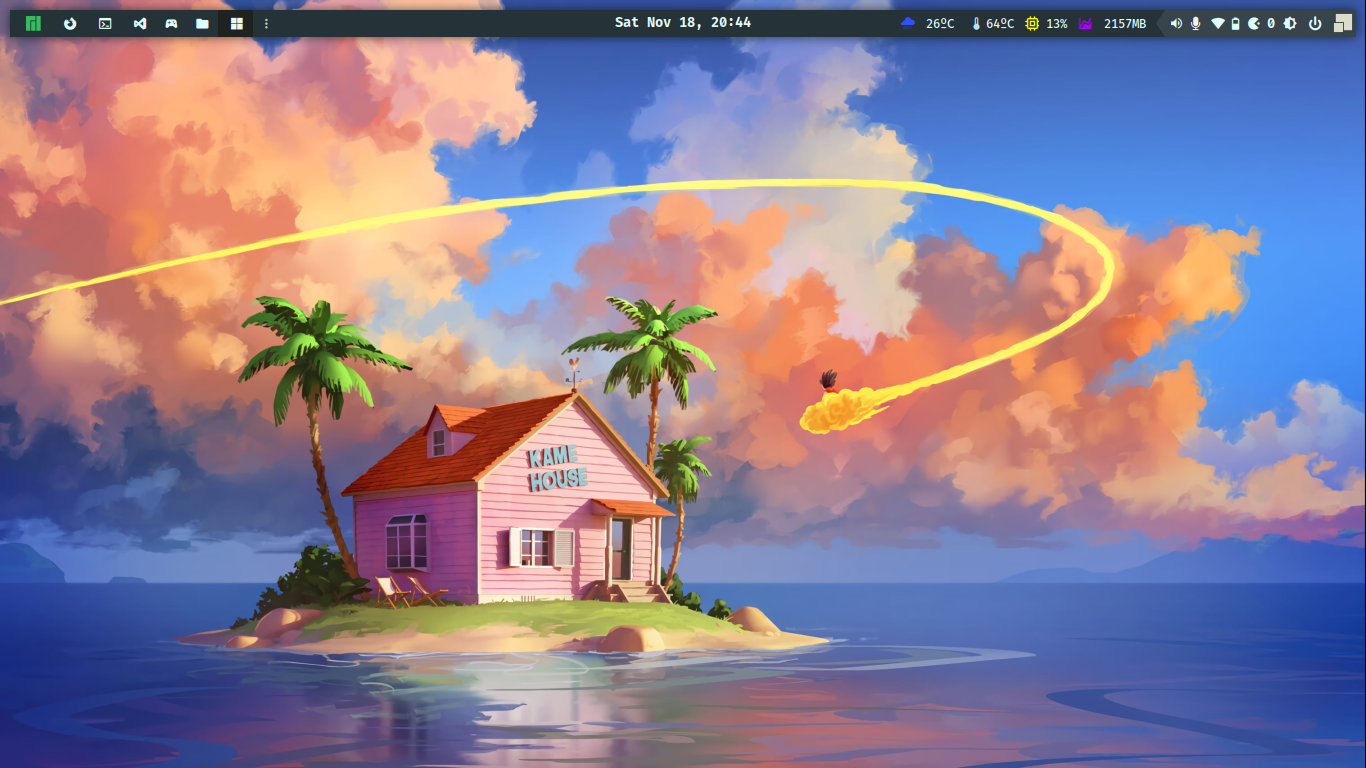

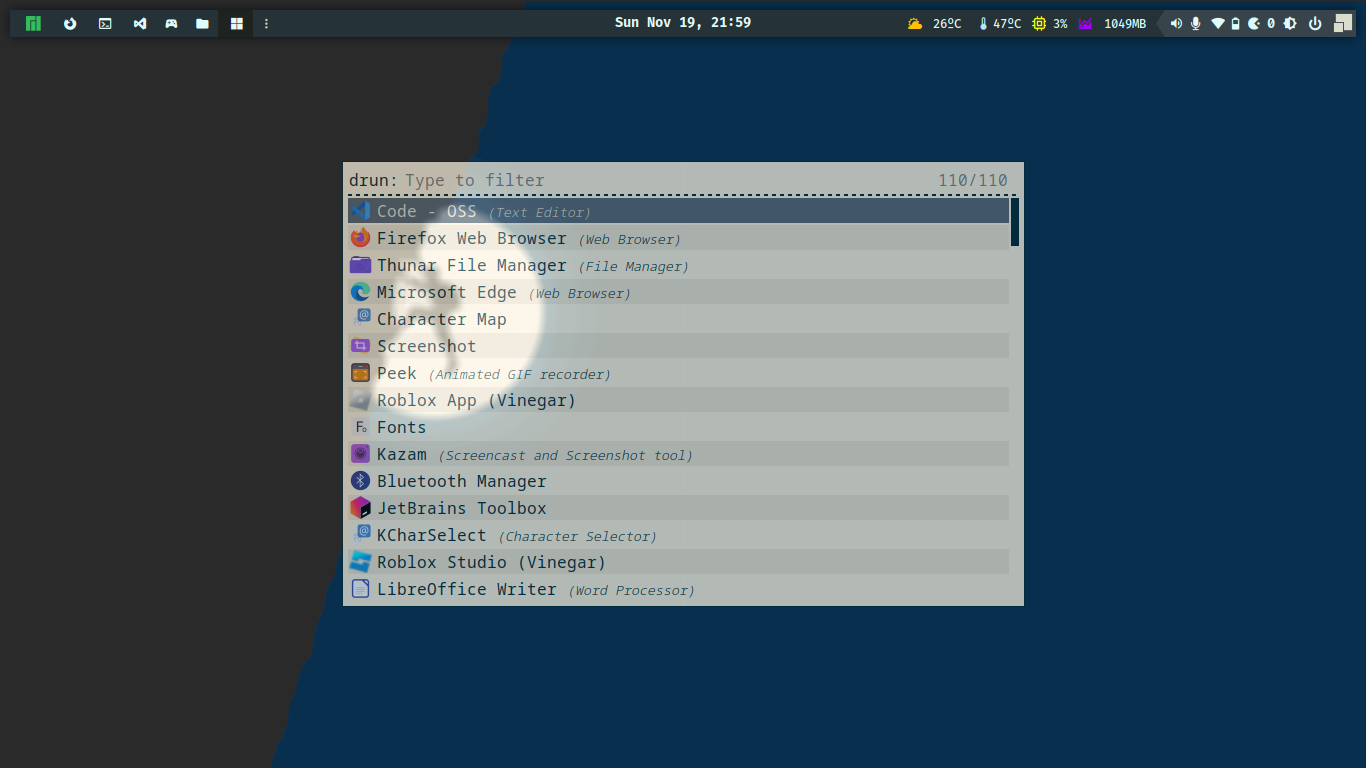

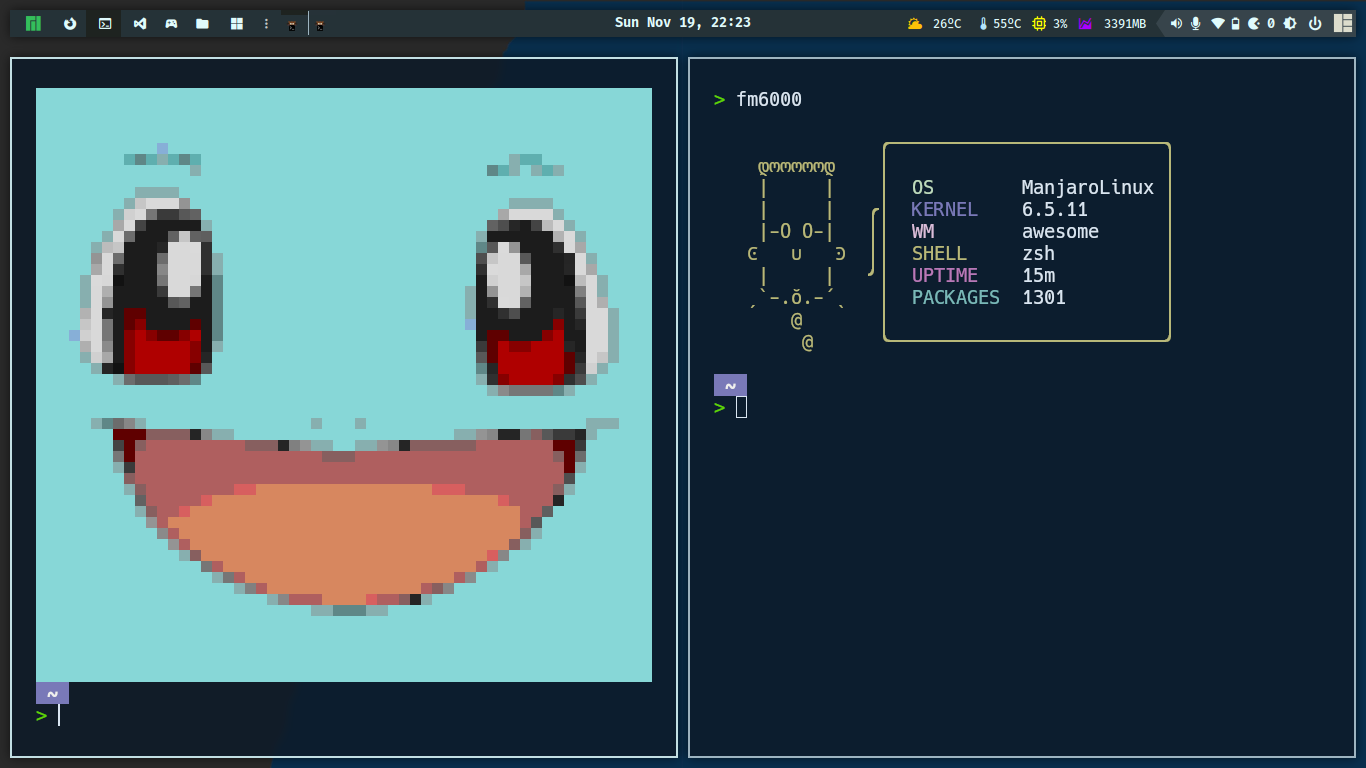

]]>A few months ago, I got back with a new experience, this time Qtile. A friend told me about this Window Manager because I was doing some things in Python at the time, but I decided to test other things in my mind, so I was using AwesomeWM, another wonder tiling Manager, in which I spent a lot of time customizing things and making dotfiles more usable and available at my github repository. This time, I did the same thing, but got deeper than Awesome WM, bringing desktop tastes inside Window Manager.

Desktop tastes 😋?

It may seem a bit unusual, but I truly appreciate the dual aspects of simplicity in configuring your graphical environment and the capability to enhance and personalize the experience, making it all unique and highly productive.

This piece is not about why I chose Qtile over Awesome WM, Hyprland, I3, and other available solutions, but I will give my reasons for doing so right now:

- I'm afraid and thinking about Wayland, because some distros were adopting Wayland instead of X11, so I thought, humm..., maybe in the next years I could have some problems, and I looked at Hyprland, but I didn't have a perfect experience with Wayland at the time, so I decided to be in the middle because Qtile works with both X11 and Wayland, despite the fact that I'm still using X11 😆.

- Lua looks very simple, and I enjoy it, but so is Python, and there is a large list of packages that we can use with 🐧Linux compatibility.

- Another challenge is that setting up Qtile is much easier than setting up Awesome WM, but I don't have all of the powers that I had in Awesome WM; for example, dropdown menus perform well in Awesome WM, but with Qtile I decided to utilize Rofi scripts because things didn't work properly.

That's my viewpoint. I still have Awesome WM, Qtile, and other Window Managers installed and working on my personal laptop because I enjoy them, and when you use Window Manager and customize things, you should be prepared for some crashes; even though I have versioned my dotfile in the github repository, incompatibilities can occur at any time.

Awesome WM lover ❤️?

After reading my piece, you should check out this article that shares my experience with dotfiles. 😁

First of all, what is Qtile?

Qtile is a dynamic tiling window manager for X11 and Wayland that was written in Python. It's very customisable, with users able to set layouts, keybindings, and widgets via a simple Python configuration file. Designed for power users, Qtile offers a mix between automation and flexibility, making it an excellent solution for those desiring a personalized and efficient workflow.

What is Qtile Ebenezer?

I started customizing Qtile using just dotfiles, but then I thought: Why shouldn't others be able to reuse the widgets I built? Just like I did in AwesomeWM with Lain and Awesome Buttons, I wanted to create something reusable.

So, I built a PyPI library and an AUR package. Why both?

- PyPI: Serves as a library for testing and checking Qtile configurations.

- AUR: Installing system-wide Python packages via pip can break your system, which is why an AUR package is necessary. You’ve probably seen the warning when trying to install Python packages globally with

pip.

Ebenezer 🪨

This library was named Ebenezer 🪨, which meaning "stone of helper.".

The quote is from I Samuel 7. After defeating the Philistines, Samuel raises his Ebenezer, declaring that God defeated the enemies on this spot. As a result, "hither by thy help I come." So I hope this stone helps you in your environment and, more importantly, in your life. 🙏🏿

The config.py file is where magic ✨ happens...

This is the entry point where we configure all window behaviours such as shortcuts, how many desktops we want, whether we want a top, bottom, or left bar, the startup process, and predefined configurations for specific windows or desktops, so as with any window manager, you can customize your environment to be as you want and, most importantly, productive.

Following we have a default Qtile configuration file:

# Copyright (c) 2010 Aldo Cortesi

# Copyright (c) 2010, 2014 dequis

# Copyright (c) 2012 Randall Ma

# Copyright (c) 2012-2014 Tycho Andersen

# Copyright (c) 2012 Craig Barnes

# Copyright (c) 2013 horsik

# Copyright (c) 2013 Tao Sauvage

#

# Permission is hereby granted, free of charge, to any person obtaining a copy

# of this software and associated documentation files (the "Software"), to deal

# in the Software without restriction, including without limitation the rights

# to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

# copies of the Software, and to permit persons to whom the Software is

# furnished to do so, subject to the following conditions:

#

# The above copyright notice and this permission notice shall be included in

# all copies or substantial portions of the Software.

#

# THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

# IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

# FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

# AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

# LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

# OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

# SOFTWARE.

from libqtile import bar, layout, qtile, widget

from libqtile.config import Click, Drag, Group, Key, Match, Screen

from libqtile.lazy import lazy

from libqtile.utils import guess_terminal

mod = "mod4"

terminal = guess_terminal()

keys = [

# A list of available commands that can be bound to keys can be found

# at https://docs.qtile.org/en/latest/manual/config/lazy.html

# Switch between windows

Key([mod], "h", lazy.layout.left(), desc="Move focus to left"),

Key([mod], "l", lazy.layout.right(), desc="Move focus to right"),

Key([mod], "j", lazy.layout.down(), desc="Move focus down"),

Key([mod], "k", lazy.layout.up(), desc="Move focus up"),

Key([mod], "space", lazy.layout.next(), desc="Move window focus to other window"),

# Move windows between left/right columns or move up/down in current stack.

# Moving out of range in Columns layout will create new column.

Key(

[mod, "shift"], "h", lazy.layout.shuffle_left(), desc="Move window to the left"

),

Key(

[mod, "shift"],

"l",

lazy.layout.shuffle_right(),

desc="Move window to the right",

),

Key([mod, "shift"], "j", lazy.layout.shuffle_down(), desc="Move window down"),

Key([mod, "shift"], "k", lazy.layout.shuffle_up(), desc="Move window up"),

# Grow windows. If current window is on the edge of screen and direction

# will be to screen edge - window would shrink.

Key([mod, "control"], "h", lazy.layout.grow_left(), desc="Grow window to the left"),

Key(

[mod, "control"], "l", lazy.layout.grow_right(), desc="Grow window to the right"

),

Key([mod, "control"], "j", lazy.layout.grow_down(), desc="Grow window down"),

Key([mod, "control"], "k", lazy.layout.grow_up(), desc="Grow window up"),

Key([mod], "n", lazy.layout.normalize(), desc="Reset all window sizes"),

# Toggle between split and unsplit sides of stack.

# Split = all windows displayed

# Unsplit = 1 window displayed, like Max layout, but still with

# multiple stack panes

Key(

[mod, "shift"],

"Return",

lazy.layout.toggle_split(),

desc="Toggle between split and unsplit sides of stack",

),

Key([mod], "Return", lazy.spawn(terminal), desc="Launch terminal"),

# Toggle between different layouts as defined below

Key([mod], "Tab", lazy.next_layout(), desc="Toggle between layouts"),

Key([mod], "w", lazy.window.kill(), desc="Kill focused window"),

Key(

[mod],

"f",

lazy.window.toggle_fullscreen(),

desc="Toggle fullscreen on the focused window",

),

Key(

[mod],

"t",

lazy.window.toggle_floating(),

desc="Toggle floating on the focused window",

),

Key([mod, "control"], "r", lazy.reload_config(), desc="Reload the config"),

Key([mod, "control"], "q", lazy.shutdown(), desc="Shutdown Qtile"),

Key([mod], "r", lazy.spawncmd(), desc="Spawn a command using a prompt widget"),

]

# Add key bindings to switch VTs in Wayland.

# We can't check qtile.core.name in default config as it is loaded before qtile is started

# We therefore defer the check until the key binding is run by using .when(func=...)

for vt in range(1, 8):

keys.append(

Key(

["control", "mod1"],

f"f{vt}",

lazy.core.change_vt(vt).when(func=lambda: qtile.core.name == "wayland"),

desc=f"Switch to VT{vt}",

)

)

groups = [Group(i) for i in "123456789"]

for i in groups:

keys.extend(

[

# mod + group number = switch to group

Key(

[mod],

i.name,

lazy.group[i.name].toscreen(),

desc="Switch to group {}".format(i.name),

),

# mod + shift + group number = switch to & move focused window to group

Key(

[mod, "shift"],

i.name,

lazy.window.togroup(i.name, switch_group=True),

desc="Switch to & move focused window to group {}".format(i.name),

),

# Or, use below if you prefer not to switch to that group.

# # mod + shift + group number = move focused window to group

# Key([mod, "shift"], i.name, lazy.window.togroup(i.name),

# desc="move focused window to group {}".format(i.name)),

]

)

layouts = [

layout.Columns(border_focus_stack=["#d75f5f", "#8f3d3d"], border_width=4),

layout.Max(),

# Try more layouts by unleashing below layouts.

# layout.Stack(num_stacks=2),

# layout.Bsp(),

# layout.Matrix(),

# layout.MonadTall(),

# layout.MonadWide(),

# layout.RatioTile(),

# layout.Tile(),

# layout.TreeTab(),

# layout.VerticalTile(),

# layout.Zoomy(),

]

widget_defaults = dict(

font="sans",

fontsize=12,

padding=3,

)

extension_defaults = widget_defaults.copy()

screens = [

Screen(

bottom=bar.Bar(

[

widget.CurrentLayout(),

widget.GroupBox(),

widget.Prompt(),

widget.WindowName(),

widget.Chord(

chords_colors={

"launch": ("#ff0000", "#ffffff"),

},

name_transform=lambda name: name.upper(),

),

widget.TextBox("default config", name="default"),

widget.TextBox("Press <M-r> to spawn", foreground="#d75f5f"),

# NB Systray is incompatible with Wayland, consider using StatusNotifier instead

# widget.StatusNotifier(),

widget.Systray(),

widget.Clock(format="%Y-%m-%d %a %I:%M %p"),

widget.QuickExit(),

],

24,

# border_width=[2, 0, 2, 0], # Draw top and bottom borders

# border_color=["ff00ff", "000000", "ff00ff", "000000"] # Borders are magenta

),

# You can uncomment this variable if you see that on X11 floating resize/moving is laggy

# By default we handle these events delayed to already improve performance, however your system might still be struggling

# This variable is set to None (no cap) by default, but you can set it to 60 to indicate that you limit it to 60 events per second

# x11_drag_polling_rate = 60,

),

]

# Drag floating layouts.

mouse = [

Drag(

[mod],

"Button1",

lazy.window.set_position_floating(),

start=lazy.window.get_position(),

),

Drag(

[mod], "Button3", lazy.window.set_size_floating(), start=lazy.window.get_size()

),

Click([mod], "Button2", lazy.window.bring_to_front()),

]

dgroups_key_binder = None

dgroups_app_rules = [] # type: list

follow_mouse_focus = True

bring_front_click = False

floats_kept_above = True

cursor_warp = False

floating_layout = layout.Floating(

float_rules=[

# Run the utility of `xprop` to see the wm class and name of an X client.

*layout.Floating.default_float_rules,

Match(wm_class="confirmreset"), # gitk

Match(wm_class="makebranch"), # gitk

Match(wm_class="maketag"), # gitk

Match(wm_class="ssh-askpass"), # ssh-askpass

Match(title="branchdialog"), # gitk

Match(title="pinentry"), # GPG key password entry

]

)

auto_fullscreen = True

focus_on_window_activation = "smart"

reconfigure_screens = True

# If things like steam games want to auto-minimize themselves when losing

# focus, should we respect this or not?

auto_minimize = True

# When using the Wayland backend, this can be used to configure input devices.

wl_input_rules = None

# xcursor theme (string or None) and size (integer) for Wayland backend

wl_xcursor_theme = None

wl_xcursor_size = 24

# XXX: Gasp! We're lying here. In fact, nobody really uses or cares about this

# string besides java UI toolkits; you can see several discussions on the

# mailing lists, GitHub issues, and other WM documentation that suggest setting

# this string if your java app doesn't work correctly. We may as well just lie

# and say that we're a working one by default.

#

# We choose LG3D to maximize irony: it is a 3D non-reparenting WM written in

# java that happens to be on java's whitelist.

wmname = "LG3D"

As I said, you can do whatever you want, but remember not to crash your desktop...

What did I change in the Qtile setup?

I looked at Python and decided to try something at AwesomeWM; why not use configuration files that can be changed instead of a Python script? So I initially built the INI in the same way that I did in Lua, but I decided to switch to yaml format because it is more versatile and easier to translate to objects, so following file describe all desktop behaviours:

Environment

You can create your preferences here, such as terminal, web browser, background settings, logo, and api settings for integrations like Weather API or GitHub

environment:

modkey: mod4

terminal: alacritty

browser: firefox

wallpaper_dir: /usr/share/backgrounds/archlinux/

wallpaper_timeout: 30

os_logo: /home/qtileuser/logos/linux.svg

theme: ebenezer

os_logo_icon:

os_logo_icon_color: "fg_white"

weather_api_key: foo

city_id: 1

github_notifications_token: foo

Groups and layouts

Here you can decide how many desktops you wish to use. Qtile organizes desktops into groups, so you can have one for terminal, browser, gaming, editor, and whatever else you want, see the documentation for more information

groups:

browsers:

terminal:

editors:

games:

files:

win:

groups_layout:

default: monadtall

win: tile

files: floating

editors: monadtall

games: max

layouts:

bsp: {}

columns: {}

floating: {}

# matrix: {}

max: {}

monadtall: {}

monadwide: {}

# radiotile: {}

tile:

ratio: 0.335

margin: 0

treetab: {}

# verticaltile: {}

# zoomy: {}

Terminal

The ALT+ENTER shortcut will open a new terminal; by default, this group uses the MonadTall layout; as you can see, this setting is available in config.yaml, and you may alter it to any layout supported by Qtile.

Startup

This section covers all you need to get started with Qtile; sometimes you should create a startup process specific to the Qtile context, for instance picom or lock screen.

startup:

keyboard_layout: setxkbmap -model abnt2 -layout br && localectl set-x11-keymap br

polkit: /usr/lib/polkit-gnome/polkit-gnome-authentication-agent-1 &

picom: picom --config $home/.config/picom/picom.conf --daemon

nm_applet: nm-applet &

lock_screen: xautolock -time 10 -locker "ebenezer ui lock" &

wallpaper_slideshow: ebenezer wallpaper random $wallpaper_dir --timeout $wallpaper_timeout

dunst: dunst &

pcmanfm: pcmanfm-qt --daemon-mode &

- setxkbmap and localectl set-x11-keymap the commands set the keyboard layout to Brazilian (BR) on a Linux system, first for the current section and then for X11 and terminals.

- polkit (PolicyKit) is a Linux framework for managing privileged operations, enabling non-root users to execute specified system functions securely without full

sudoaccess. It enhances security and usability by providing fine-grained access management to system services and GUI apps. - picom is a lightweight X11 compositor for Linux, enhancing visual effects like transparency, shadows, and fading animations. It's often used with tiling window managers like Qtile to improve aesthetics and reduce screen tearing.

- xautolock is a lightweight utility that automatically locks the X session after a period of inactivity.

- nm-applet is a graphical interface for NetworkManager that lets users manage network connections (Wi-Fi, Ethernet, VPN, and so on) via a system tray icon.

- dusnt is a lightweight, highly customizable notification daemon for Linux. It displays notifications in a small, unobtrusive pop-up format.

- pcmanfm is a lightweight, fast file manager for Linux, designed to be simple yet functional. It provides essential features like tabbed browsing, drag-and-drop support, and integrates well with minimal desktop environments, offering a clean interface and efficient file management.

- wallpaper_slideshow, as you can see, I created a widget package with CLI support called ebenezer that allows you to establish a procedure for changing your desktop wallpaper.

Commands

This section describes custom commands, which can be used as shortcut keys or to override commands in integrated features like as the lock screen or wallpaper.

commands:

screenshot: flameshot gui --clipboard --path $home/Pictures/Screenshots

screenshot_full: flameshot full --clipboard --path $home/Pictures/Screenshots

change_wallpaper: ebenezer wallpaper set /usr/share/backgrounds/archlinux/

mixer: pavucontrol # another option: kitty "pulsemixer"

powermenu: ebenezer ui powermenu

wallpaper_menu: ebenezer ui wallpaper-menu

open_url: zen-browser --new-tab $url

launcher: rofi -show drun -show-icons -theme $rofi_home/launcher.rasi

launcher_windows: rofi -show window -show-icons -theme $rofi_home/launcher.rasi

desktop_settings: ebenezer ui settings

Floating

Specifies which windows, identified by their class name or title, should automatically follow floating behavior, meaning they are not tiled but remain freely movable and resizable. This is particularly useful for dialog windows, pop-ups, and applications that don't work well in a tiling setting.

floating:

wm_class:

- pavucontrol

- Arandr

- Blueman-manager

- Gpick

- Kruler

- Sxiv

- Tor Browser

- Wpa_gui

- veromix

- xtightvncviewer

- gnome-calculator

- ebenezer - configuration manager

- "!floatingwindow"

- Toplevel

- kdenlive

title:

- ebenezer - configuration manager

Fonts

Define the default fonts for bars and widgets based on context. Qtile, GTK, and QT use owl files to define styles; see sections ./gtk-4.0,./gtk-3.0,./qt5ct, and ./qt6ct in my dotfiles repository.

fonts:

font: Fira Code Nerd Font Bold

font_regular: Fira Code Nerd Font Medium

font_light: Fira Code Nerd Font Light

font_strong: Fira Code Nerd Font Semibold

font_strong_bold: Fira Code Nerd Font Bold

font_size: 14

font_icon: Fira Code Nerd Font Medium

font_icon_size: 16

font_arrow: Fira Code Nerd Font Medium

font_arrow_size: 30

🚨 You must use Nerd Fonts, a typeface with patches designed for developers and featuring a large variety of glyphs (icons). Specifically, to include a large number of additional glyphs from popular 'iconic typefaces' like Font Awesome, Devicons, Octicons, and others.

Keybindings

This section details all shortcut keys or keybindings to launch a terminal, open a menu, alter window dimensions, change layout, take screenshots, use tools, or triggers anything.

The keybind could be the following actions list:

- terminal: Launches your preferred terminal, as defined in the environment section.

- spawn_command: Runs a custom command or a predefined one from the commands section.

- browser: Opens your preferred browser, as defined in the environment section.

- lock_screen: Locks the screen. By default, this uses the ebenezer ui lock command, which is based on a customized i3-lock. You can override this behavior in lock_screen.command.

- reload_config: Reloads the Qtile configuration.

- shutdown: Closes the Qtile session and returns to the session manager (e.g., LightDM, XDM, SDDM, GDM).

- next_layout: Switches to the next window layout based on the order defined in the layouts section.

- kill_window: Closes the currently active window. Since Qtile does not handle windows like GNOME or AwesomeWM, this command is required to close a window—expected behavior in a window manager.

- focus_(left|right|down|up): Moves the focus to the next window in the specified direction.

- fullscreen: Toggles full-screen mode for the active window.

- floating: Toggles floating mode for the active window.

- shuffle_(left|right|up|down): Moves the window in the specified direction.

- grow_(left|right|up|down): Increases the window size in the specified direction.

- reset_windows: Resets window sizes to their default layout dimensions.

- dropdown: Spawns a window as a drop-down, as defined in the scratchpads section.

keybindings:

- {name: Launch terminal, keys: $mod Return, action: terminal}

- {name: Launcher, keys: $mod shift Return, action: spawn_command, command: launcher}

- {name: Launch Window, keys: $mod control Tab, action: spawn_command, command: launcher_windows}

- {name: Web browser, keys: $mod b, action: browser}

- {name: Lock Screen, keys: $mod control x, action: lock_screen}

# qtile keys

- {name: Reload the config, keys: $mod shift r, action: reload_config}

- {name: Shutdown Qtile, keys: $mod control q, action: shutdown}

# window key

- {name: Toggle between layouts, keys: $mod Tab, action: next_layout}

- {name: Kill focused window, keys: $mod shift c, action: kill_window}

- {name: Move focus to left, keys: $mod h, action: focus_left}

- {name: Move focus to right, keys: $mod l, action: focus_right}

- {name: Move focus down, keys: $mod j, action: focus_down}

- {name: Move focus up, keys: $mod k, action: focus_up}

- {name: Move window focus to other window, keys: $mod space, action: focus_next}

- {name: Toggle fullscreen on the focused window, keys: $mod f, action: fullscreen}

- {name: Toggle floating on the focused window, keys: $mod t, action: floating}

- {name: Move window to the left, keys: $mod shift h, action: shuffle_left}

- {name: Move window to the right, keys: $mod shift l, action: shuffle_right}

- {name: Move window down, keys: $mod shift j, action: shuffle_down}

- {name: Move window up, keys: $mod shift k, action: shuffle_up}

- {name: Grow window to the left, keys: $mod control h, action: grow_left}

- {name: Grow window to the right, keys: $mod control l, action: grow_right}

- {name: Grow window down, keys: $mod control j, action: grow_down}

- {name: Grow window up, keys: $mod control k, action: grow_up}

- {name: Reset all window sizes, keys: $mod n, action: reset_windows}

# screenshot

- {name: Take a screenshot, keys: print, action: spawn_command, command: screenshot}

- {name: Take a screenshot of the full desktop, keys: $mod print, action: spawn_command,

command: screenshot_full}

# desktop

# desktop

- {name: Change wallpaper, group: settings, keys: $mod control w, action: spawn_command, command: change_wallpaper}

- {name: Desktop Settings, group: settings, keys: $mod control Escape, action: spawn_command, command: desktop_settings}

- {name: Keybindings help, group: settings, keys: $mod slash, action: dropdown, command: htop}

# options

# - { name: Spawn a command using a prompt widget, keys: $mod r, action: cmd }

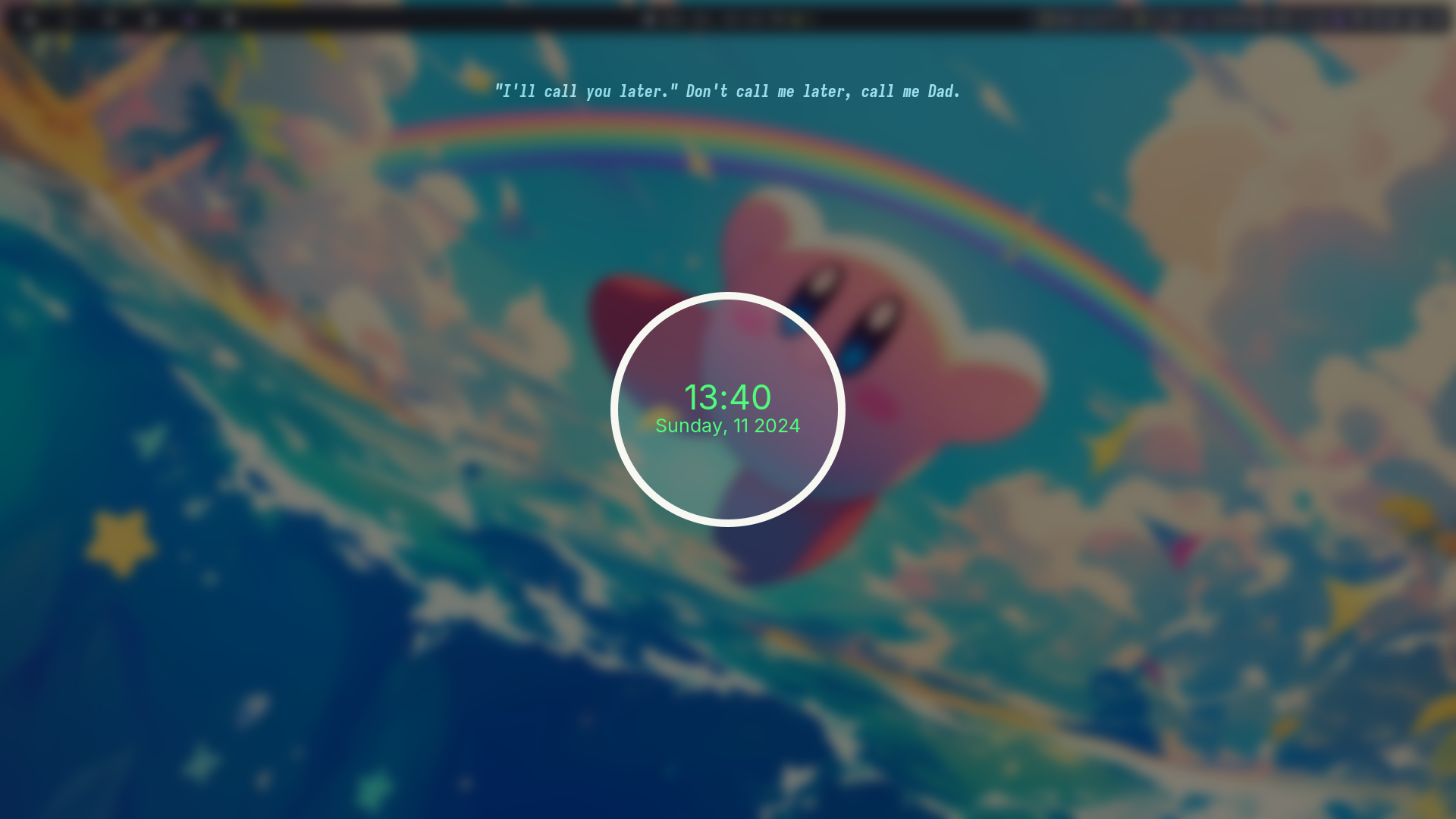

Lock screen

This section describes how to configure the lock screen customization built on top of i3-lock. The changes displays a lock with transparency from the current desktop and includes some IT jokes from Reddit or Icanhazdadjoke.

lock_screen:

command: ebenezer ui lock # default lock command

timeout: 10

font_size: 45

font: Inter ExtraBold

quote_font_size: 22

quote_font_path: /usr/share/fonts/inter/IosevkaNerdFontMono-ExtraBoldOblique.ttf

quote_font_path_alt: /usr/share/fonts/liberation/LiberationMono-Bold.ttf

joke_providers: reddit,icanhazdad

quote_foreground_color: '#7A1CAC'

quote_text_color: '#F5F7F8'

icanhazdad_joke_url: https://icanhazdadjoke.com/

reddit_joke_url: https://www.reddit.com/r/ProgrammerDadJokes.json

blurtype: '0x8'

I appreciate the idea to have random jokes on the lock screen. I was deeply inspired 🤩 by shinrai-dotfiles and created my own custom settings to make it flexible and performant.

Monitoring

You can assign colors for the threshold medium and high to your CPU and RAM, allowing you to easily detect when something is wrong in your system. 🤓 It's important to note that you can choose hex colors (#000) or defined colors (fg_normal, fg_red), which will be described in the next steps.

monitoring:

default_color: fg_normal

high_color: fg_orange

medium_color: fg_yellow

threshold_medium: 65

threshold_high: 85

burn: yes

Bar

Any desktop might have a bar, either at the top like MacOS, XFCE, Patheon or at the bottom like Windows, Deepin, Mint or Budgie. You may select, but it can't be right or left at the moment because I need to do some changes to this design to have a more appealing appearance, just like Ubuntu docks.

In addition to defining bar dimensions, you can choose and modify which widgets should appear in the bar in what order. Would be wonderful drag-and-drop design, but yaml works, trust me 🫶🏻🥹❤️🩹.

bar:

position: top

size: 34

widgets:

- type: group_box

margin_y: 3

margin_x: 3

padding: 0

borderwidth: 0

active: fg_normal

inactive: fg_normal

this_current_screen_border: bg_topbar_selected

this_screen_border: fg_blue

other_current_screen_border: bg_topbar_selected

highlight_color: bg_topbar_selected

highlight_method: text

foreground: fg_normal

rounded: false

urgent_alert_method: border

urgent_border: fg_urgent